Protecting sensitive data is crucial in the realm of Big Data, where vast amounts of information flow through systems every second. Data tokenization strengthens security by replacing sensitive values with substitute tokens that have no exploitable meaning on their own, while the original values are kept in a separately protected system. Tokens can preserve properties that downstream systems rely on, such as length or format, without revealing the underlying data. In this article, we explore the benefits of data tokenization for Big Data storage and walk through how organizations can implement it to limit the damage from breaches and other cyber threats.

Understanding Data Tokenization

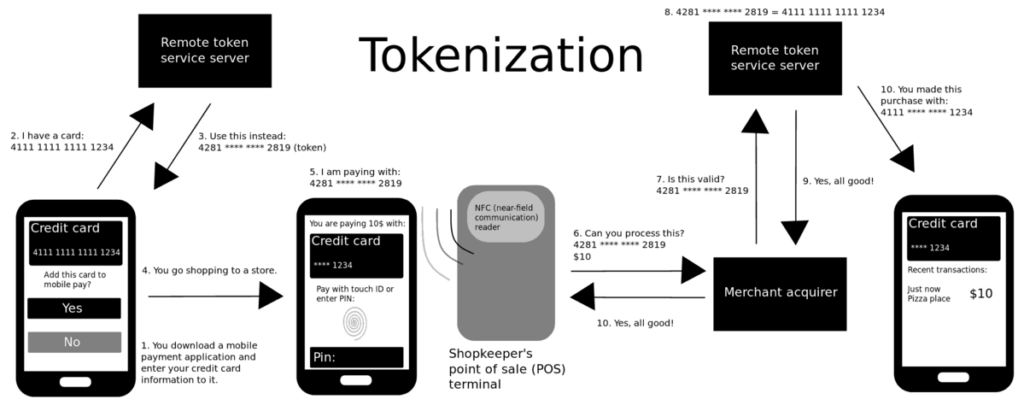

Data tokenization is a process that substitutes sensitive data elements with non-sensitive equivalents, called tokens. In the common vault-based approach, the original data is stored in a secured, centralized vault, while the tokens are used in storage, analytics, and other operations that do not need the real values. Key features of data tokenization include:

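- Increased Security: A token reveals nothing about the original value to anyone without access to the vault that maps it back.

- Reduced Compliance Burden: Systems that only handle tokens can often be taken out of, or reduced in, compliance scope (for example, under PCI DSS). Note that regulations such as GDPR still treat tokenized personal data as pseudonymized rather than anonymized.

- Scalability: Tokenization services can be scaled out as organizations grow and data volumes increase, provided the vault is designed for the required lookup throughput.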
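To make the vault model concrete, here is a minimal Python sketch. The `TokenVault` class and the `tok_` prefix are hypothetical names chosen for illustration, and a plain dictionary stands in for what would really be a hardened, access-controlled datastore.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a token vault (illustration only)."""

    def __init__(self) -> None:
        # token -> original value; a real vault would encrypt and
        # access-control this mapping.
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Tokens come from a cryptographically secure random source, so they
        # are not derived from the value and reveal nothing about it.
        token = "tok_" + secrets.token_hex(16)
        while token in self._store:  # regenerate on the (unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Reversal is just a lookup: only code with vault access can do it.
        return self._store[token]


vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
print(token)                    # random on every run, e.g. tok_9f2c...
print(vault.detokenize(token))  # 4111 1111 1111 1111
```

Because each call issues a fresh random token, tokenizing the same value twice yields two different tokens. Whether that is desirable is one of the strategy decisions covered in Step 3.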

Benefits of Data Tokenization in Big Data Storage

Tokenization provides several advantages for organizations handling big data:

- Enhanced Data Privacy: Analysts and applications can work with tokenized data sets without ever seeing raw personal information, which helps build customer trust and loyalty.

- Minimized Risk of Data Breaches: If tokenized data is stolen, the tokens are of little use to attackers unless the vault is also compromised, which reduces potential damage.

- Regulatory Compliance: Tokenization helps organizations meet the requirements of regulations and standards such as GDPR, HIPAA, and PCI DSS, reducing the risk of fines and legal repercussions.

Steps to Implement Data Tokenization for Big Data

Implementing data tokenization involves several critical steps:

Step 1: Identify Sensitive Data

The first step in implementing tokenization is to identify the types of sensitive data within your big data environment. This can include:

- Personally Identifiable Information (PII)

- Payment card data (the cardholder data covered by PCI DSS)

- Health information (for example, protected health information under HIPAA)

- Confidential Business Information

Use data discovery tools to locate where these fields live across your data stores and to classify each data set by sensitivity.
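Dedicated discovery tools combine many signals, but the core idea can be sketched with pattern matching. The Python example below is a simplified, hypothetical scanner: it flags candidate payment card numbers (confirmed with the Luhn checksum to cut false positives) and strings shaped like US Social Security numbers. The patterns and the `scan_record` name are illustrative assumptions, not a complete detection rule set.

```python
import re

# Illustrative patterns only; real discovery tools use far richer rules.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")  # 13-19 digits
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_record(text: str) -> list[tuple[str, str]]:
    """Return (category, matched_text) pairs for likely sensitive values."""
    findings = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            findings.append(("payment_card", match.group()))
    for match in SSN_PATTERN.finditer(text):
        findings.append(("us_ssn", match.group()))
    return findings


print(scan_record("card 4111 1111 1111 1111, ssn 123-45-6789"))
# [('payment_card', '4111 1111 1111 1111'), ('us_ssn', '123-45-6789')]
```

Running a scanner like this over samples of each table or file is one way to build the inventory that later steps tokenize.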

Step 2: Evaluate Tokenization Solutions

Next, evaluate various tokenization solutions available in the market. Consider factors such as:

- Integration capabilities: Ensure the solution can seamlessly integrate with your existing data architecture.

- Security protocols: Assess the encryption and access controls implemented by the solution.

- Performance impact: Evaluate how tokenization may affect data processing speeds and overall performance.

Step 3: Develop a Tokenization Strategy

Once you’ve selected a solution, develop a comprehensive tokenization strategy. This strategy should encompass:

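- Tokenization Method: Decide whether tokens should be deterministic (the same input always yields the same token, which keeps joins and aggregations working) or random (a fresh token each time, which leaks less information), and whether they should be format-preserving (matching the length and character set of the original so existing schemas and validations keep working). Choose the combination that best suits your workloads.

- Access Controls: Define who may request detokenization (the reverse lookup from token to original value) and under what conditions, so that sensitive data is not improperly exposed.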

- Data Lifecycle Management: Map out how tokens will be handled throughout their lifecycle, from creation to expiration.
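The Python sketch below contrasts two of these choices. `deterministic_token` derives a token with HMAC-SHA256 under a secret key, so equal inputs produce equal tokens; `format_preserving_card_token` issues random digits of the same length and keeps the last four for display. Both function names and the hard-coded key are hypothetical. An HMAC-derived token cannot be reversed mathematically, so detokenization would still require storing the mapping in the vault, and the random-digit token is a simple vault-backed substitute, not a standardized format-preserving encryption scheme such as NIST FF1.

```python
import hashlib
import hmac
import secrets

# Hypothetical key for illustration; a real deployment would load it from a
# key-management service and never hard-code it.
SECRET_KEY = b"example-key-do-not-use"


def deterministic_token(value: str) -> str:
    """Keyed hash: the same input always yields the same token."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "dtok_" + digest[:32]


def format_preserving_card_token(pan: str) -> str:
    """Random digits of the original digit count, keeping the last four."""
    digits = [c for c in pan if c.isdigit()]
    random_part = [str(secrets.randbelow(10)) for _ in range(len(digits) - 4)]
    return "".join(random_part + digits[-4:])


a = deterministic_token("alice@example.com")
b = deterministic_token("alice@example.com")
print(a == b)  # True: equal inputs give equal tokens, so joins still work

card_token = format_preserving_card_token("4111 1111 1111 1111")
print(card_token)  # 16 digits ending in 1111; the first 12 are random
```

Deterministic tokens trade some privacy for utility: identical values are visibly linked across data sets, which is exactly what makes joins possible. Some implementations also make random card tokens fail the Luhn check so they cannot be mistaken for real card numbers.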

Step 4: Implement the Tokenization Process

With the strategy in place, proceed to implement the tokenization process:

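- Token Generation: Develop a mechanism that produces tokens securely, for example from a cryptographically secure random source, so that tokens cannot be derived from or reversed to the original values, and ensure each token maps to exactly one original value.

- Mapping Tokens to Original Data: Maintain a secure vault system that maps tokens back to their respective original data values.

- Testing: Verify that tokenization round-trips correctly and that downstream jobs, queries, and reports keep working on tokenized data, so the process does not disrupt business operations.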
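A useful baseline test is to tokenize a sample batch and assert three properties: no raw value survives in the output, non-sensitive fields are untouched, and detokenization restores the original records. The Python sketch below does this for a single column. The record layout, the `email` field, and the helper names are hypothetical, and a plain dictionary stands in for the vault; in a distributed job, the same per-record logic could run inside each partition, with the vault as a shared service.

```python
import secrets


def tokenize_column(records: list[dict], field: str, vault: dict[str, str]) -> list[dict]:
    """Return copies of `records` with `field` replaced by random tokens."""
    out = []
    for record in records:
        token = "tok_" + secrets.token_hex(16)
        while token in vault:  # never reuse an existing token
            token = "tok_" + secrets.token_hex(16)
        vault[token] = record[field]
        out.append({**record, field: token})
    return out


def detokenize_column(records: list[dict], field: str, vault: dict[str, str]) -> list[dict]:
    """Reverse tokenize_column by looking each token up in the vault."""
    return [{**record, field: vault[record[field]]} for record in records]


records = [
    {"customer_id": 1, "email": "alice@example.com", "country": "DE"},
    {"customer_id": 2, "email": "bob@example.com", "country": "FR"},
]
vault: dict[str, str] = {}
tokenized = tokenize_column(records, "email", vault)

originals = {r["email"] for r in records}
# 1. No raw value survives in the tokenized output.
assert all(r["email"] not in originals for r in tokenized)
# 2. Non-sensitive fields are untouched.
assert [(r["customer_id"], r["country"]) for r in tokenized] == [(1, "DE"), (2, "FR")]
# 3. The round trip restores the original records exactly.
assert detokenize_column(tokenized, "email", vault) == records
print("tokenization checks passed")
```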

Step 5: Train Employees on Tokenization Practices

Ensure that employees understand the significance of data tokenization. Provide training on:

- The purpose of tokenization

- The processes involved in handling tokens

- How to report any security concerns

Step 6: Monitor and Audit Tokenized Data

After implementing data tokenization, continuously monitor and audit secured environments to ensure ongoing compliance and security. This involves:

- Regular Security Audits: Periodically evaluate the effectiveness of tokenization strategies and make improvements as necessary.

- Access Log Monitoring: Track who accesses tokenized data and, above all, who requests detokenization and when, so that unauthorized or unusual attempts are detected.

- Compliance Checks: Ensure that your tokenization practices remain aligned with regulatory requirements.
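Access controls and log monitoring fit together naturally at the detokenization boundary: every request is checked against a policy and recorded, allowed or not. The Python sketch below assumes a hypothetical role-per-field policy (`DETOKENIZE_POLICY`), an in-memory audit trail, and a simple rule that flags any user with a denied request. A production system would write to tamper-resistant log storage and apply richer anomaly rules.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical policy: which roles may detokenize which fields.
DETOKENIZE_POLICY = {
    "email": {"support_agent", "fraud_analyst"},
    "card_number": {"fraud_analyst"},
}
AUDIT_TRAIL: list[dict] = []


class AccessDenied(Exception):
    """Raised when a caller's role may not detokenize the requested field."""


def detokenize(vault: dict[str, str], token: str, field: str, user: str, role: str) -> str:
    allowed = role in DETOKENIZE_POLICY.get(field, set())
    # Record every request, allowed or not, before acting on it.
    AUDIT_TRAIL.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "field": field, "allowed": allowed,
    })
    if not allowed:
        raise AccessDenied(f"role {role!r} may not detokenize {field!r}")
    return vault[token]


def users_with_denied_requests(trail: list[dict], threshold: int = 1) -> set[str]:
    """Flag users whose denied requests reach the threshold."""
    denied = Counter(entry["user"] for entry in trail if not entry["allowed"])
    return {user for user, count in denied.items() if count >= threshold}


vault = {"tok_a1": "alice@example.com"}
print(detokenize(vault, "tok_a1", "email", "sam", "support_agent"))
try:
    detokenize(vault, "tok_a1", "card_number", "sam", "support_agent")
except AccessDenied as exc:
    print("denied:", exc)
print(users_with_denied_requests(AUDIT_TRAIL))  # {'sam'}
```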

Challenges of Data Tokenization

While tokenization offers numerous benefits, there are challenges to consider:

- Initial Setup Complexity: Setting up a tokenization system can be complex and time-consuming.

- Performance Overhead: Depending on the implementation, tokenization may introduce latency in data processing.

- Managing Token Vaults: The vault is both a high-value target and a critical dependency. A compromise can expose the original data, and an outage can block any process that needs to detokenize.

Choosing Between Tokenization and Encryption

Organizations often weigh tokenization against encryption. While both methods secure sensitive data, they serve different purposes:

- Tokenization: Replaces sensitive data with tokens, while the original data remains in a secure vault. It is well suited to systems that store and pass data around but rarely need the real values, because those systems never hold anything sensitive.

- Encryption: Transforms data mathematically with an algorithm and a key. Anyone holding the key can recover the original, so no vault is needed, but encrypted data generally must be decrypted before it can be processed, and key management becomes the critical point: a leaked key exposes every value encrypted with it.

Future of Data Tokenization in Big Data

The future of data tokenization is promising. As data privacy regulations tighten and threats to data security increase, organizations must prioritize secure methods of data management. Advances in machine learning and AI may also make tokenization technologies more efficient and easier to implement.

Moreover, with the rise of decentralized data systems, tokenization could play a pivotal role in enhancing the security of data across multiple entities and environments.

Conclusion

Implementing data tokenization is a highly effective way to secure Big Data storage. By replacing sensitive information with tokens and confining the originals to a well-protected vault, organizations significantly reduce the risks of data breaches and unauthorized access, ease their compliance obligations, and cultivate a trusted relationship with clients. The journey may be complex, but the benefits are substantial and far-reaching.