In Big Data analytics, outliers often carry the most useful information: they can reveal errors, rare events, or behavior that the bulk of the data hides. Detecting them is hard when the data is noisy, because the volume and velocity of incoming records make it difficult to separate genuine anomalies from random variation. AI techniques, in particular machine learning and deep learning models, offer practical ways to make that separation at scale. This article outlines the main techniques, tools, and implementation steps for AI-based outlier detection in noisy big data environments.

Understanding Outlier Detection

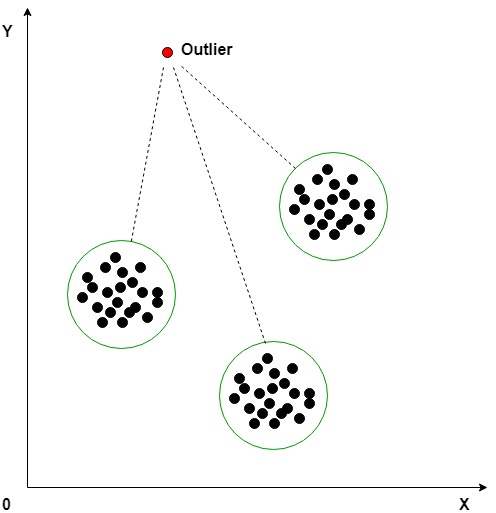

Outlier detection refers to the identification of data points that deviate significantly from the majority of the dataset. These points may indicate errors or unique phenomena. In the context of big data, outliers can skew results and lead to incorrect conclusions. Hence, implementing an effective outlier detection strategy is crucial.

The Challenges of Noisy Big Data

Noisy data contains random errors or variances. This noise can stem from various sources, including:

- Faulty sensors: In IoT scenarios, faulty sensor outputs can introduce anomalies.

- Human errors: Mistakes in data entry can produce misleading results.

- Environmental factors: External conditions can affect data integrity, especially in real-time systems.

In a noisy dataset, distinguishing true outliers from normal variability becomes particularly difficult. Machine learning and deep learning algorithms help by learning what typical variation looks like from the data itself, which lets them automate and refine a process that fixed thresholds handle poorly.

The Importance of AI in Outlier Detection

AI and machine learning algorithms can analyze trends and patterns in large datasets that traditional methods might miss. The power of AI lies in its ability to:

- Adapt: AI algorithms can evolve based on new data, improving their outlier detection capabilities over time.

- Process large volumes: AI can efficiently handle and analyze massive datasets that exceed human capabilities.

- Enhance accuracy: Well-chosen and well-tuned algorithms can reduce both false positives and false negatives in anomaly detection.

Methods for AI-Based Outlier Detection

1. Statistical Methods

Statistical techniques rely on probabilistic models to identify outliers. Common approaches include:

- Z-score analysis: Calculates the number of standard deviations a data point is from the mean. A Z-score greater than 3 or less than -3 is often considered an outlier.

- IQR method: This method uses the interquartile range (IQR = Q3 - Q1) to determine outliers. Points lying below Q1 - 1.5 × IQR or above Q3 + 1.5 × IQR are flagged as outliers.
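As a minimal sketch of the two rules above, assuming a one-dimensional NumPy array of readings (the function names `zscore_outliers` and `iqr_outliers` and the synthetic data are illustrative, not part of any library):

```python
import numpy as np

def zscore_outliers(x, threshold=3.0):
    """Boolean mask of points more than `threshold` standard deviations from the mean."""
    x = np.asarray(x, dtype=float)
    std = x.std()
    if std == 0:
        # All values identical: nothing can deviate from the mean.
        return np.zeros(x.shape, dtype=bool)
    return np.abs((x - x.mean()) / std) > threshold

def iqr_outliers(x, k=1.5):
    """Boolean mask of points below Q1 - k*IQR or above Q3 + k*IQR."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x < q1 - k * iqr) | (x > q3 + k * iqr)

# Synthetic readings (assumption): 200 values around 50 plus one injected spike.
rng = np.random.default_rng(0)
values = np.concatenate([rng.normal(50.0, 2.0, size=200), [95.0]])

z_mask = zscore_outliers(values)
iqr_mask = iqr_outliers(values)
print("Z-score flags:", int(z_mask.sum()), "| IQR flags:", int(iqr_mask.sum()))
```

Extreme values inflate the mean and standard deviation that the Z-score depends on, whereas the IQR rule is built on quartiles and so tends to be less affected by the outliers it is trying to find.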

2. Machine Learning Techniques

Machine learning algorithms can improve outlier detection beyond traditional statistical methods.

i. Supervised Learning

In supervised learning, models are trained on labeled data so that they learn to distinguish normal from anomalous instances. Models such as Support Vector Machines (SVM) and Decision Trees can be effective when enough labeled training data is available, although anomalies are usually rare, so the resulting class imbalance has to be handled during training.
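A minimal sketch of the supervised setting with scikit-learn's `SVC`, assuming a small synthetic dataset in which anomalies are already labeled (the data and the choice of an RBF kernel are illustrative assumptions):

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic labeled data (assumption): label 0 = normal, label 1 = known anomaly.
normal = rng.normal(loc=0.0, scale=1.0, size=(300, 2))
anomalous = rng.normal(loc=5.0, scale=0.5, size=(15, 2))
X = np.vstack([normal, anomalous])
y = np.concatenate([np.zeros(300, dtype=int), np.ones(15, dtype=int)])

# class_weight="balanced" reweights errors so the rare anomaly class is not ignored.
clf = SVC(kernel="rbf", class_weight="balanced").fit(X, y)

# Classify one point near the normal cloud and one near the anomaly cloud.
new_points = np.array([[0.2, -0.1], [5.1, 4.8]])
predictions = clf.predict(new_points)
print(predictions)
```

A supervised model like this can only recognize the kinds of anomalies present in its training labels, which is one reason unsupervised methods are often used alongside it.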

ii. Unsupervised Learning

In contrast, unsupervised learning algorithms operate on unlabelled data, identifying groups of similar data points and marking the remaining ones as outliers. Common unsupervised techniques include:

- K-means Clustering: Groups data points by similarity. Because K-means assigns every point to its nearest cluster, outliers are typically flagged as the points that lie unusually far from their assigned centroid.

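- Isolation Forest: This algorithm isolates points by repeatedly choosing a random feature and a random split value. Anomalies tend to be isolated in fewer splits than normal points, and the method can handle high-dimensional data.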
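Both unsupervised techniques can be sketched with scikit-learn. For K-means, one common way to decide that a point "does not fit" is its distance to the assigned centroid; the 99th-percentile cutoff, the `contamination` value, and the synthetic data below are assumptions chosen for illustration:

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(42)

# Synthetic data (assumption): two dense clusters plus three injected outliers.
cluster_a = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(150, 2))
cluster_b = rng.normal(loc=[6.0, 6.0], scale=0.5, size=(150, 2))
injected = np.array([[3.0, -4.0], [10.0, 0.0], [-5.0, 8.0]])
X = np.vstack([cluster_a, cluster_b, injected])

# K-means: flag the points farthest from their own centroid.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
dist = np.linalg.norm(X - kmeans.cluster_centers_[kmeans.labels_], axis=1)
kmeans_flags = dist > np.percentile(dist, 99)

# Isolation Forest: predict() returns -1 for anomalies and 1 for inliers.
forest = IsolationForest(n_estimators=200, contamination=0.01, random_state=0).fit(X)
forest_flags = forest.predict(X) == -1
forest_scores = forest.score_samples(X)  # lower score = more anomalous

print("K-means flags:", np.flatnonzero(kmeans_flags))
print("Isolation Forest flags:", np.flatnonzero(forest_flags))
```

Both cutoffs encode a guess about how many outliers to expect, so in practice they are usually tuned against whatever labeled examples or domain knowledge is available.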

3. Deep Learning Approaches

Deep learning techniques can manage complexities and extract features from high-dimensional datasets:

- Autoencoders: These neural networks learn efficient representations of data. By reconstructing input data, they can determine how well new data points fit the learned model. Anomalies typically reconstruct poorly, indicating an outlier.

- Recurrent Neural Networks (RNNs): Useful for time-series data, RNNs can analyze data patterns over time and detect anomalies that deviate from established trends.
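The reconstruction-error idea behind autoencoders can be sketched without a deep learning framework. The example below is a stand-in, not a production design: it uses scikit-learn's `MLPRegressor` trained to reproduce its own input through a two-unit bottleneck, on synthetic data, with an assumed 99th-percentile error threshold. A TensorFlow or PyTorch autoencoder would follow the same train, reconstruct, and threshold pattern.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic "normal" data (assumption): 8 correlated features driven by 2 latent factors.
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 8))
X_train = latent @ mixing + rng.normal(scale=0.05, size=(500, 8))

# Synthetic anomalies (assumption): points that ignore that correlation structure.
X_anomalous = rng.normal(scale=3.0, size=(10, 8))

scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)

# The 2-unit middle layer is the bottleneck; the training target is the input itself.
autoencoder = MLPRegressor(hidden_layer_sizes=(16, 2, 16), activation="tanh",
                           max_iter=2000, random_state=0)
autoencoder.fit(X_train_s, X_train_s)

def reconstruction_error(model, X):
    """Mean squared reconstruction error per row."""
    return np.mean((X - model.predict(X)) ** 2, axis=1)

train_err = reconstruction_error(autoencoder, X_train_s)
threshold = np.percentile(train_err, 99)  # assumed cutoff, tune per use case

anomaly_err = reconstruction_error(autoencoder, scaler.transform(X_anomalous))
flags = anomaly_err > threshold
print("Flagged", int(flags.sum()), "of", len(flags), "synthetic anomalies")
```

The model is fitted on data assumed to be mostly normal, so points that break the learned structure reconstruct poorly and exceed the threshold.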

Implementing AI-Based Outlier Detection

1. Data Preprocessing

Before applying any machine learning model, effective data preprocessing is essential. This includes:

- Data cleaning: Address missing values, duplicate records, and irrelevant features that may introduce noise.

- Normalization: Scale features to ensure that no single feature dominates the analysis due to varying ranges.

- Encoding: Convert categorical data into numerical formats to be utilized in machine learning algorithms.
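The three preprocessing steps above can be chained in a single scikit-learn transformer. This is a sketch under assumptions: the column names (`temperature`, `humidity`, `site`) and values are hypothetical, and median imputation is only one of several reasonable ways to handle missing values.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical sensor records with a duplicate row and a missing value.
raw = pd.DataFrame({
    "temperature": [20.1, 19.8, 20.1, 35.0, 20.4],
    "humidity": [0.50, np.nan, 0.50, 0.90, 0.52],
    "site": ["north", "south", "north", "south", "north"],
})

# Data cleaning: drop exact duplicate records.
clean = raw.drop_duplicates().reset_index(drop=True)

preprocess = ColumnTransformer(
    [
        # Fill missing numeric values, then scale to zero mean and unit variance.
        ("num", make_pipeline(SimpleImputer(strategy="median"), StandardScaler()),
         ["temperature", "humidity"]),
        # Encoding: one binary column per category.
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["site"]),
    ],
    sparse_threshold=0,  # always return a dense array
)

X = preprocess.fit_transform(clean)
print(X.shape)
```

Fitting the transformer once and reusing it on new data keeps the scaling and encoding consistent between training and detection.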

2. Feature Engineering

Feature engineering enhances the predictive capabilities of an AI model. Consider:

- Creating derived features: Combining existing features can reveal hidden patterns.

- Dimensionality reduction: Techniques like PCA (Principal Component Analysis) can help simplify the dataset while retaining key information, minimizing noise impact.
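As an illustration of the dimensionality-reduction step, the sketch below applies scikit-learn's `PCA` to synthetic data and keeps enough components to explain 95% of the variance. The 95% target and the data-generating setup are assumptions made for the example.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)

# Synthetic data (assumption): 10 observed features driven by 3 hidden factors plus noise.
factors = rng.normal(size=(400, 3))
loadings = rng.normal(size=(3, 10))
X = factors @ loadings + rng.normal(scale=0.05, size=(400, 10))

# PCA is scale-sensitive, so standardize first.
X_scaled = StandardScaler().fit_transform(X)

# A float n_components in (0, 1) keeps the smallest number of components
# whose cumulative explained variance reaches that fraction.
pca = PCA(n_components=0.95, svd_solver="full")
X_reduced = pca.fit_transform(X_scaled)

print("Components kept:", pca.n_components_)
print("Variance explained:", round(float(pca.explained_variance_ratio_.sum()), 3))
```

The discarded low-variance directions are where much of the random noise lives in this setup, which is why the reduced representation can be easier for a downstream detector to work with.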

3. Model Selection and Training

Based on the nature of the dataset, select the appropriate model. Utilize libraries like:

- scikit-learn: Offers various machine learning algorithms suitable for outlier detection.

- TensorFlow/PyTorch: Ideal for implementing deep learning techniques including autoencoders and RNNs.

Train your model on a representative dataset, adjusting hyperparameters to optimize performance. Where labeled examples are available, use cross-validation to assess how well the model generalizes.
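Hyperparameter tuning and cross-validation can be combined with scikit-learn's `GridSearchCV`. The sketch below assumes labeled synthetic data and a small, arbitrary parameter grid for a decision tree; stratified folds keep the rare anomaly class represented in every split.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(3)

# Synthetic labeled data (assumption): 400 normal points and 40 anomalies.
normal = rng.normal(0.0, 1.0, size=(400, 2))
anomalous = rng.normal(4.0, 0.7, size=(40, 2))
X = np.vstack([normal, anomalous])
y = np.concatenate([np.zeros(400, dtype=int), np.ones(40, dtype=int)])

# Stratified folds preserve the anomaly ratio in each train/validation split.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

search = GridSearchCV(
    DecisionTreeClassifier(class_weight="balanced", random_state=0),
    param_grid={"max_depth": [2, 4, 8], "min_samples_leaf": [1, 5]},
    scoring="f1",  # F1 on the anomaly class (label 1)
    cv=cv,
)
search.fit(X, y)

best_params, best_f1 = search.best_params_, search.best_score_
print(best_params, round(float(best_f1), 3))
```

Scoring on F1 rather than accuracy matters here: with roughly 9% anomalies, a model that never flags anything would still look about 91% accurate.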

4. Evaluation Metrics

Evaluating the model’s performance is crucial. Consider using metrics such as:

- Precision and Recall: Precision is the share of flagged points that are true outliers, so high precision means few false alarms. Recall is the share of true outliers that the model actually flags.

- F1-Score: A harmonic mean of precision and recall, providing a single metric to gauge performance.

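- AUC-ROC: Summarizes how well the model’s scores separate outliers from normal points across all decision thresholds; 0.5 corresponds to random ranking and 1.0 to perfect separation.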
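These metrics are available in `sklearn.metrics`. The labels, predictions, and scores below are a small hand-made example (not real results) so the arithmetic can be checked by hand: there are 2 true positives, 1 false positive, and 1 false negative.

```python
from sklearn.metrics import f1_score, precision_score, recall_score, roc_auc_score

# Hand-made example: 1 = outlier, 0 = normal.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 0]
y_pred = [0, 0, 1, 0, 0, 0, 1, 1, 0, 0]                          # hard decisions
scores = [0.1, 0.2, 0.7, 0.1, 0.3, 0.2, 0.9, 0.8, 0.4, 0.1]      # anomaly scores

precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 2 / 3
recall = recall_score(y_true, y_pred)        # TP / (TP + FN) = 2 / 3
f1 = f1_score(y_true, y_pred)                # harmonic mean = 2 / 3
auc = roc_auc_score(y_true, scores)          # 20 of 21 outlier/normal pairs ranked correctly

print(round(precision, 3), round(recall, 3), round(f1, 3), round(auc, 3))
```

Precision, recall, and F1 depend on the chosen decision threshold, while AUC-ROC is computed from the raw scores and is threshold-independent.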

Best Practices for AI-Based Outlier Detection

- Iterate and Experiment: Data analysis is an ongoing process. Continuously refine models based on new insights or changes in data patterns.

- Ensemble Learning: Combining predictions from multiple models can enhance robustness and reduce false positive rates.

- Deploy Robust Monitoring Tools: Post-deployment, consistently monitor the model’s performance to accommodate real-world fluctuations in data.
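The ensemble idea can be sketched by averaging the rank of each point under two different detectors, here Isolation Forest and Local Outlier Factor. Rank averaging is one simple combination scheme among several; the helper `to_rank`, the synthetic data, and the choice of detectors are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

def to_rank(scores):
    """Map scores to ranks in [0, 1]; larger means more anomalous."""
    return scores.argsort().argsort() / (len(scores) - 1)

rng = np.random.default_rng(7)

# Synthetic data (assumption): 300 inliers plus 5 injected far-away points.
inliers = rng.normal(0.0, 1.0, size=(300, 3))
injected = rng.uniform(6.0, 8.0, size=(5, 3))
X = np.vstack([inliers, injected])

# Both detectors report "higher = more normal", so negate to get anomaly scores.
iso = IsolationForest(random_state=0).fit(X)
iso_score = -iso.score_samples(X)

lof = LocalOutlierFactor(n_neighbors=20).fit(X)
lof_score = -lof.negative_outlier_factor_

# Ranks put the two detectors on a common scale before averaging.
combined = (to_rank(iso_score) + to_rank(lof_score)) / 2
top5 = np.argsort(combined)[-5:]
print("Most anomalous indices:", sorted(top5.tolist()))
```

Converting to ranks before averaging avoids one detector dominating simply because its raw scores span a wider numeric range.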

Conclusion

AI-based outlier detection can make anomaly detection in noisy big data both more accurate and more efficient. By combining careful preprocessing and feature engineering with suitable statistical, machine learning, or deep learning models, and by testing them rigorously, organizations can identify and isolate outliers within vast and complex datasets, leading to better data quality and more informed decisions. Continuous monitoring keeps detection accurate as the data evolves.