Processing large-scale sparse data efficiently is crucial in Big Data analytics. Sparse data refers to datasets in which most values are empty or zero. Traditional dense processing methods store and compute over every entry, so at scale they waste large amounts of memory and time on values that carry no information. Specialized data structures, algorithms, and frameworks are therefore essential for extracting meaningful insights from sparse datasets. In this article, we explore strategies and best practices for processing large-scale sparse data efficiently in the context of Big Data analytics.

Understanding Sparse Data in Big Data

Sparse data refers to datasets that contain a large proportion of zero or null values. In the context of big data, efficient processing of sparse data is crucial because many applications in machine learning, recommendation systems, and natural language processing rely on sparse datasets. Examples include user-item interaction matrices and text represented as term-frequency vectors.

Challenges of Sparse Data Processing

Processing large-scale sparse data presents unique challenges:

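- Storage Efficiency: Stored densely, sparse data wastes space on zeros. For example, a dense matrix with one million rows and 100,000 columns of 64-bit floats needs about 800 GB, even if nearly all entries are zero.

- Computational Complexity: Algorithms that iterate over every entry spend most of their time on zeros; efficient methods scale with the number of nonzero entries rather than with the full matrix size.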

- Data Representation: Finding the right representation format for sparse data is crucial for maintaining performance.
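To make the storage gap concrete, the sketch below compares the bytes a dense float64 array would need with the bytes used by a SciPy CSR matrix holding the same synthetic data. The matrix shape and the 0.1% density are arbitrary assumptions chosen for illustration; the dense size is computed arithmetically rather than allocated.

```python
import numpy as np
import scipy.sparse as sp

n_rows, n_cols, density = 10_000, 5_000, 0.001

# Synthetic CSR matrix with about 0.1% nonzero entries.
X = sp.random(n_rows, n_cols, density=density, format="csr",
              random_state=0, dtype=np.float64)

# Bytes a dense float64 array of the same shape would need (not allocated here).
dense_bytes = n_rows * n_cols * np.dtype(np.float64).itemsize

# CSR stores only the nonzero values plus two integer index arrays.
sparse_bytes = X.data.nbytes + X.indices.nbytes + X.indptr.nbytes

print(f"dense: {dense_bytes / 1e6:.1f} MB, CSR: {sparse_bytes / 1e6:.2f} MB")
```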

Best Practices for Efficient Sparse Data Processing

Here are some strategies to ensure efficient processing of large-scale sparse data:

1. Utilize Sparse Matrix Formats

When dealing with sparse data, using appropriate data structures is essential. Some common representations include:

- Compressed Sparse Row (CSR): Efficient for row slicing and matrix-vector products.

- Compressed Sparse Column (CSC): Efficient for column slicing and column-oriented operations; it is also the input format used by many sparse direct solvers.

- Dictionary of Keys (DOK): Useful for constructing sparse matrices incrementally.

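- Coordinate List (COO): Stores each nonzero as a (row, column, value) triplet; well suited to building a matrix from triplet data and converting it quickly to CSR or CSC, but not to direct arithmetic or slicing.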
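A minimal sketch of how these formats fit together in SciPy's scipy.sparse module: build a matrix from triplets in COO, convert to CSR for row slicing and matrix-vector products, convert to CSC for column slicing, and use DOK for element-by-element construction. The small matrix and vector are made-up values for illustration.

```python
import numpy as np
import scipy.sparse as sp

# COO: build a 3 x 4 matrix from (row, column, value) triplets.
rows = np.array([0, 0, 1, 2, 2])
cols = np.array([0, 3, 1, 2, 3])
vals = np.array([5.0, 1.0, 2.0, 4.0, 3.0])
A_coo = sp.coo_matrix((vals, (rows, cols)), shape=(3, 4))

# Convert once, then use the format that suits the access pattern.
A_csr = A_coo.tocsr()  # fast row slicing and matrix-vector products
A_csc = A_coo.tocsc()  # fast column slicing

x = np.array([1.0, 2.0, 3.0, 4.0])
y = A_csr @ x          # touches only the 5 stored nonzeros
row_2 = A_csr[2]       # row slice (1 x 4 sparse matrix)
col_3 = A_csc[:, 3]    # column slice (3 x 1 sparse matrix)

# DOK: set individual entries incrementally, then convert for computation.
D = sp.dok_matrix((3, 4), dtype=np.float64)
D[0, 0] = 5.0
D[1, 1] = 2.0
D_csr = D.tocsr()

print(y)  # [ 9.  4. 24.]
```

A common pattern is to accumulate data in COO or DOK and convert to CSR or CSC once before heavy computation, since conversions are cheap compared with repeated inefficient access.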

2. Leverage Distributed Computing Frameworks

To handle large-scale sparse datasets, consider using distributed computing frameworks such as:

- Apache Spark: Provides RDD and DataFrame APIs for distributed, in-memory processing; Spark MLlib includes sparse vector types and machine learning algorithms that accept sparse features.

- Apache Hadoop: Suited to batch processing; MapReduce jobs can represent sparse data as (row, column, value) records so that only nonzero entries are stored, emitted, and shuffled.
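The MapReduce strategy can be illustrated with a sparse matrix-vector product. The sketch below is a single-process simulation in plain Python, not Hadoop's API: map_phase, shuffle, reduce_phase, and sparse_matvec_mapreduce are hypothetical names, and it assumes the dense vector x is small enough to be shared with every mapper. Because the input consists only of nonzero triplets, zeros are never read, emitted, or shuffled.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record, x):
    """Map one nonzero (row, col, value) triplet to a partial product keyed by row."""
    row, col, value = record
    yield row, value * x[col]


def shuffle(pairs):
    """Group intermediate (key, value) pairs by key, as the framework's shuffle would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Sum the partial products for one row."""
    return key, sum(values)


def sparse_matvec_mapreduce(records, x):
    mapped = chain.from_iterable(map_phase(r, x) for r in records)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


# Nonzero entries of a 3 x 4 matrix and a dense vector (illustrative values).
records = [(0, 0, 5.0), (0, 3, 1.0), (1, 1, 2.0), (2, 2, 4.0), (2, 3, 3.0)]
x = [1.0, 2.0, 3.0, 4.0]
result = sparse_matvec_mapreduce(records, x)
print(result)  # {0: 9.0, 1: 4.0, 2: 24.0}
```

Rows with no nonzero entries simply do not appear in the output and are implicitly zero.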

3. Adopt Sparsity-Aware Algorithms

Choose algorithms whose cost scales with the number of nonzero entries rather than with the full size of the data. For example:

- Stochastic Gradient Descent (SGD): Efficient for training models on large datasets; with sparse features, the loss gradient for each sample involves only that sample's nonzero features.

- Matrix Factorization Techniques: Useful in recommendation systems for learning low-rank user and item factors from the observed entries of large-scale sparse user-item matrices.

- Feature Selection Algorithms: Lasso regression (L1 regularization) shrinks the coefficients of irrelevant features to exactly zero, yielding a simpler, sparse model.
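SGD and matrix factorization combine naturally: a basic factorization loop visits only the observed (user, item, rating) triplets, so the cost of each epoch grows with the number of ratings rather than with users times items. The sketch below is a plain SGD matrix factorization with L2 regularization; factorize and rmse are hypothetical helpers, and the ratings, rank k, learning rate, regularization strength, and epoch count are illustrative assumptions, not tuned values.

```python
import numpy as np


def factorize(ratings, n_users, n_items, k=2, lr=0.05, reg=0.01, epochs=500, seed=0):
    """Learn user factors P and item factors Q so that P[u] @ Q[i] approximates each rating."""
    rng = np.random.default_rng(seed)
    P = 0.1 * rng.standard_normal((n_users, k))
    Q = 0.1 * rng.standard_normal((n_items, k))
    for _ in range(epochs):
        # Visit observed triplets in random order; unobserved cells are never touched.
        for idx in rng.permutation(len(ratings)):
            u, i, r = ratings[idx]
            err = r - P[u] @ Q[i]
            p_u = P[u].copy()  # keep the pre-update user vector for the item step
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_u - reg * Q[i])
    return P, Q


def rmse(P, Q, ratings):
    errors = [r - P[u] @ Q[i] for u, i, r in ratings]
    return float(np.sqrt(np.mean(np.square(errors))))


# Nine observed ratings in a 4 x 4 user-item matrix (the other 7 cells are unknown).
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 1.0),
           (2, 3, 5.0), (3, 0, 1.0), (3, 2, 5.0), (3, 3, 4.0)]
P, Q = factorize(ratings, n_users=4, n_items=4)
print(f"training RMSE: {rmse(P, Q, ratings):.3f}")
```

Predictions for unobserved cells come from P[u] @ Q[i]; production systems typically add bias terms and evaluate on held-out ratings rather than training error.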

4. Use Specialized Libraries

Many libraries and frameworks are optimized for sparse data computations. Consider integrating:

- scikit-learn: Many estimators and transformers accept SciPy sparse matrices directly as input.

- SciPy: The scipy.sparse module provides sparse matrix types (CSR, CSC, COO, DOK, and others) built on NumPy arrays, and scipy.sparse.linalg provides sparse linear algebra routines. NumPy itself has no sparse array type.

- Pandas: Offers sparse data types (SparseDtype) that store only the non-fill values, allowing easy manipulation of mostly empty columns.
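A short sketch of these libraries working together, assuming recent versions of SciPy, scikit-learn, and pandas: a scikit-learn Lasso model is fit directly on a SciPy CSR matrix (no densification), and a mostly-zero pandas Series is converted to a sparse dtype. The synthetic data, the five "true" coefficients, and alpha=0.001 are illustrative assumptions.

```python
import numpy as np
import pandas as pd
import scipy.sparse as sp
from sklearn.linear_model import Lasso

# scikit-learn: fit Lasso directly on a sparse CSR feature matrix.
X = sp.random(500, 200, density=0.05, format="csr", random_state=0)
true_coef = np.zeros(200)
true_coef[:5] = [3.0, -2.0, 1.5, 4.0, -1.0]  # only 5 informative features
y = X @ true_coef
model = Lasso(alpha=0.001, max_iter=10_000).fit(X, y)
n_selected = np.count_nonzero(model.coef_)  # L1 penalty zeroes out most coefficients

# pandas: SparseDtype stores only the values that differ from fill_value.
dense = pd.Series(np.zeros(100_000))
dense.iloc[::1000] = 1.0  # 100 nonzero entries
sparse = dense.astype(pd.SparseDtype("float64", fill_value=0.0))

print(f"Lasso kept {n_selected} of 200 coefficients")
print(f"dense: {dense.memory_usage()} bytes, sparse: {sparse.memory_usage()} bytes")
```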

5. Optimize Memory Usage

Memory consumption can escalate when processing large-scale sparse datasets. To mitigate this, you can:

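- Use Memory Mapping: Memory-mapped files (for example, numpy.memmap) let a program access on-disk arrays as if they were in memory, loading only the portions it touches, which helps with datasets that do not fit into RAM.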

- Data Type Optimization: Use smaller data types wherever possible (e.g., float32 instead of float64).

- Batch Processing: Process data in smaller batches instead of attempting to load the entire dataset into memory.
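The three techniques above can be combined for a CSR matrix, as in this sketch: cast the stored values to float32, save the CSR component arrays with NumPy and reopen them read-only with np.load(..., mmap_mode="r"), then compute per-row sums one batch of rows at a time. row_sums_in_batches is a hypothetical helper, and the temporary directory, matrix size, and batch size are assumptions for illustration.

```python
import os
import tempfile

import numpy as np
import scipy.sparse as sp

# Data type optimization: float32 halves the bytes used by the stored values.
X = sp.random(10_000, 1_000, density=0.01, format="csr", random_state=0, dtype=np.float64)
X32 = X.astype(np.float32)

# Memory mapping: persist the CSR arrays, then reopen them read-only as memmaps.
workdir = tempfile.mkdtemp()
for name in ("data", "indices", "indptr"):
    np.save(os.path.join(workdir, f"{name}.npy"), getattr(X32, name))
data = np.load(os.path.join(workdir, "data.npy"), mmap_mode="r")
indices = np.load(os.path.join(workdir, "indices.npy"), mmap_mode="r")
indptr = np.load(os.path.join(workdir, "indptr.npy"), mmap_mode="r")


def row_sums_in_batches(data, indices, indptr, n_cols, batch_rows=1_000):
    """Compute per-row sums, copying only batch_rows rows into RAM at a time."""
    n_rows = len(indptr) - 1
    out = np.empty(n_rows, dtype=np.float64)
    for start in range(0, n_rows, batch_rows):
        stop = min(start + batch_rows, n_rows)
        lo, hi = int(indptr[start]), int(indptr[stop])
        # Rebuild a small CSR block from this batch's slice of the memmapped arrays.
        block = sp.csr_matrix(
            (np.array(data[lo:hi]), np.array(indices[lo:hi]),
             np.array(indptr[start:stop + 1]) - lo),
            shape=(stop - start, n_cols),
        )
        out[start:stop] = np.asarray(block.sum(axis=1)).ravel()
    return out


sums = row_sums_in_batches(data, indices, indptr, n_cols=X32.shape[1])
```

Smaller batches lower peak memory at the cost of more loop iterations; the right batch size depends on available RAM.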

6. Data Preprocessing Techniques

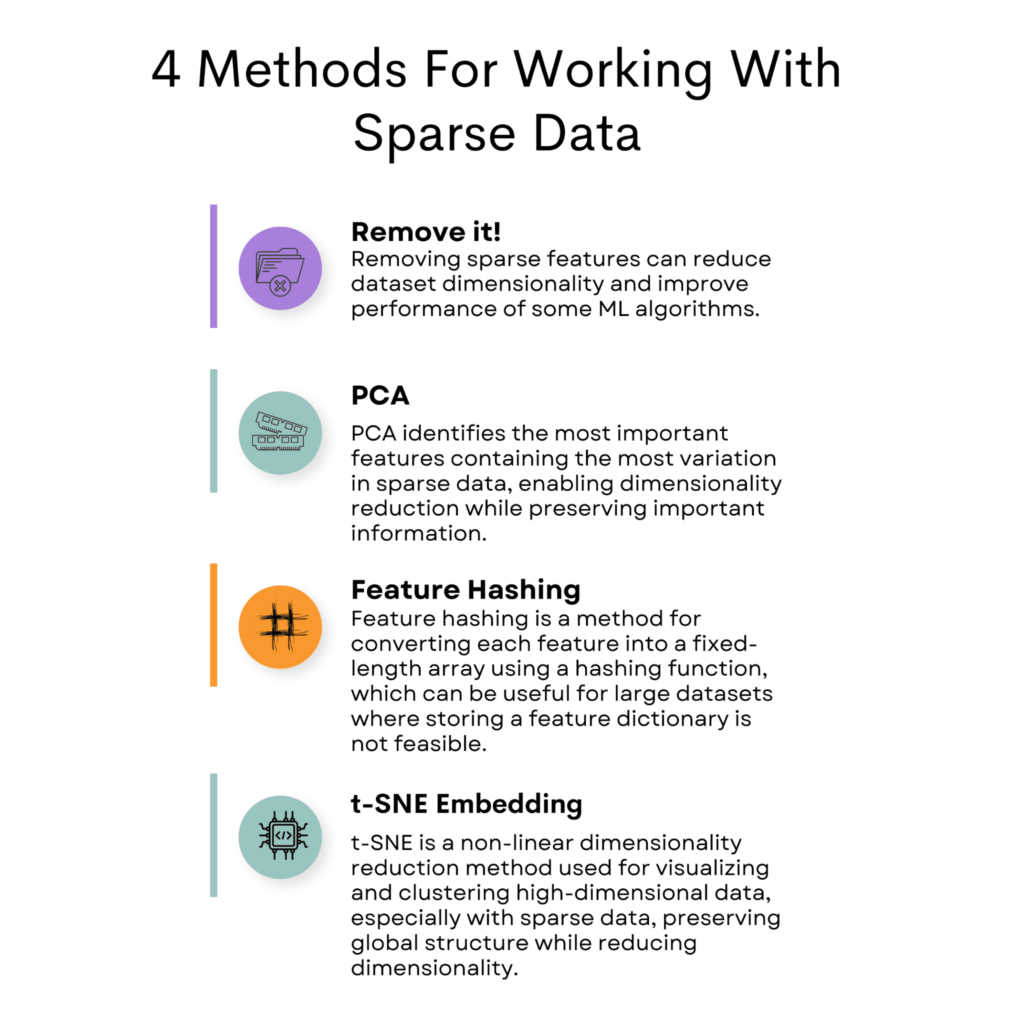

Preprocessing sparse data can greatly improve the efficiency of later analyses. Common techniques include:

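- Normalization: Scaling features to comparable ranges keeps features with large values from dominating. For sparse data, scale without centering (for example, divide each feature by its maximum absolute value), because subtracting the mean turns zeros into nonzeros and destroys sparsity.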

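- Dimensionality Reduction: Techniques such as Principal Component Analysis (PCA) or Singular Value Decomposition (SVD) can reduce the size of the dataset while preserving essential information. For sparse input, truncated SVD is often preferred because, unlike standard PCA, it does not require centering the data.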

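- Dealing with Missing Values: First decide whether an absent entry is a true zero or a genuinely missing value. For missing data, strategies such as mean imputation or KNN imputation can help, but imputing every implicit zero would turn a sparse matrix into a dense one.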
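A minimal scikit-learn sketch of sparsity-preserving preprocessing: MaxAbsScaler scales each feature into [-1, 1] without centering, so the output stays sparse with the same nonzero pattern, and TruncatedSVD then reduces dimensionality directly on the sparse matrix. StandardScaler(with_mean=False) would be another non-centering option. The synthetic matrix and n_components=20 are illustrative assumptions.

```python
import numpy as np
import scipy.sparse as sp
from sklearn.decomposition import TruncatedSVD
from sklearn.preprocessing import MaxAbsScaler

# Synthetic sparse features with values in [0, 10).
X = sp.random(1_000, 500, density=0.02, format="csr", random_state=0) * 10.0

# Scale each column by its maximum absolute value; zeros stay zeros.
X_scaled = MaxAbsScaler().fit_transform(X)

# Truncated SVD works on the sparse matrix without densifying or centering it.
svd = TruncatedSVD(n_components=20, random_state=0)
X_reduced = svd.fit_transform(X_scaled)

print(X_scaled.nnz == X.nnz, X_reduced.shape)
```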

Real-World Applications of Sparse Data Processing

Sparse data processing is at the heart of numerous real-world scenarios:

- Recommendation Systems: E-commerce platforms and streaming services utilize sparse user-item matrices to provide personalized content.

- Natural Language Processing (NLP): Text is often represented as sparse vectors, for example with TF-IDF weighting, because each document uses only a small fraction of the vocabulary.

- Image Processing: Many image processing tasks can be framed as sparse problems, such as in edge detection or feature extraction.

Performance Metrics for Sparse Data Processing

When evaluating the performance of your sparse processing pipeline, consider the following metrics:

- Execution Time: Measure the time taken to process the dataset.

- Memory Consumption: Monitor peak memory usage during processing, since the peak determines whether a job fits on the available hardware.

- Model Performance: Assess the accuracy, precision, and recall of your predictive models on sparse datasets.
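Execution time and peak memory can be measured with the standard library, as in this sketch that compares a sparse matrix-vector product with the same product after densifying the matrix. profile is a hypothetical helper; it relies on time.perf_counter and tracemalloc, which sees NumPy array buffers but may miss memory allocated by native code that does not report to Python's tracer. The matrix size is an illustrative assumption, and the dense timing includes the cost of toarray().

```python
import time
import tracemalloc

import numpy as np
import scipy.sparse as sp


def profile(fn):
    """Run fn() and return (result, elapsed seconds, peak traced bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    result = fn()
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return result, elapsed, peak


X = sp.random(2_000, 1_000, density=0.001, format="csr", random_state=0)
v = np.ones(1_000)

y_sparse, t_sparse, peak_sparse = profile(lambda: X @ v)
y_dense, t_dense, peak_dense = profile(lambda: X.toarray() @ v)

print(f"sparse: {t_sparse:.4f} s, peak {peak_sparse / 1e6:.3f} MB")
print(f"dense:  {t_dense:.4f} s, peak {peak_dense / 1e6:.3f} MB")
```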

Conclusion

Performing large-scale sparse data processing efficiently requires a multifaceted approach that combines the right data formats, distributed computing frameworks, sparsity-aware algorithms, and optimized processing techniques. Used together, these tools let organizations derive insights from sparse datasets while minimizing computational cost and resource usage, improving the overall performance and scalability of their systems. Whether you are working in machine learning, recommendation systems, or NLP, these strategies will help you overcome the inherent challenges of sparse data and support informed decisions based on accurate, timely analysis.