In Big Data analysis, large-scale neural attention mechanisms let models focus on the parts of the input that matter most for a task, loosely analogous to the way people prioritize information. Built into data analysis pipelines, they help organizations sift through massive datasets, identify patterns, correlations, and anomalies, and derive actionable insights for decision-making. This article explains how attention mechanisms work, how to apply them in the Big Data domain, and which tools, challenges, and trends to keep in mind.

Extracting actionable insights is the central goal of Big Data work, and large-scale neural attention mechanisms are among the most promising methods for achieving it. Widely used in deep learning, these techniques improve performance in tasks such as natural language processing, image recognition, and large-scale data analysis.

Understanding Neural Attention Mechanisms

Neural attention mechanisms are inspired by human cognitive attention. They help models focus on specific parts of the input data that are more relevant for the task at hand, allowing them to ignore irrelevant information.

In the context of Big Data, these mechanisms can significantly enhance the model’s ability to process vast amounts of information by dynamically weighting the importance of various data elements. This results in improved model performance, especially for complex datasets.
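
The dynamic weighting described above can be illustrated with scaled dot-product attention, one common formulation. This is a minimal NumPy sketch rather than a production implementation; the function names and the random inputs are assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def scaled_dot_product_attention(queries, keys, values):
    """Return (output, weights) for soft attention.

    queries: (n_queries, d), keys: (n_keys, d), values: (n_keys, d_v)
    """
    d = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d)  # similarity of each query to each key
    weights = softmax(scores, axis=-1)      # non-negative, each row sums to 1
    return weights @ values, weights        # weighted average of the values

# Hypothetical toy data: 2 queries attending over 5 data elements.
rng = np.random.default_rng(0)
queries = rng.normal(size=(2, 4))
keys = rng.normal(size=(5, 4))
values = rng.normal(size=(5, 3))
output, weights = scaled_dot_product_attention(queries, keys, values)
print(output.shape, weights.sum(axis=-1))
```

Each row of `weights` shows how strongly one query attends to each data element, which is the "importance" the model assigns dynamically.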

The Architecture of Attention Mechanisms

There are several architectures for implementing attention mechanisms. The most prominent include:

- Soft Attention: A differentiable approach that assigns a weight to every part of the input and combines the parts as a weighted average. Because the weights are continuous, the model can be trained end to end with standard backpropagation.

- Hard Attention: Unlike soft attention, hard attention selects specific parts of the input for processing. This method is non-differentiable and often requires reinforcement learning methods for optimization.

- Self-Attention: Also known as intra-attention, this approach considers the relationships between different parts of the same input, enabling the model to draw correlations and dependencies within the data.

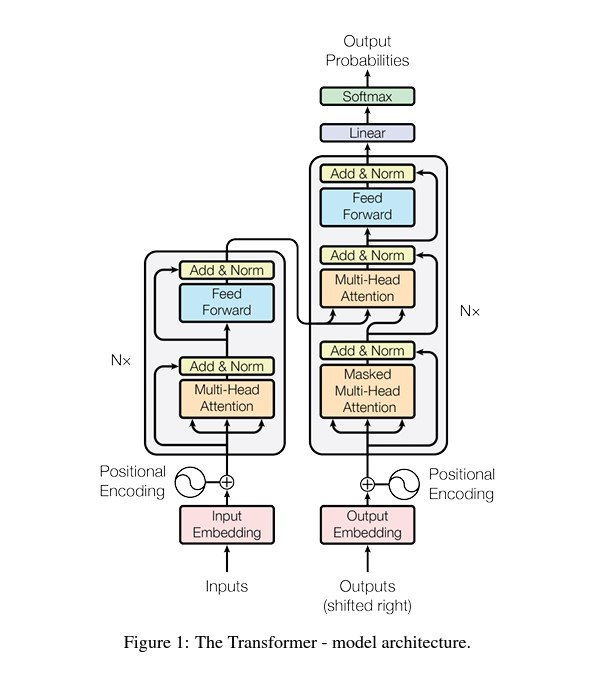

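- Multi-Head Attention: This runs several attention operations (heads) in parallel, each with its own learned projections, allowing the model to jointly attend to information from different representation subspaces at different positions. It is most often combined with self-attention.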
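
To make the self-attention and multi-head variants above more concrete, here is a minimal NumPy sketch. The weight matrices are random placeholders standing in for learned parameters, and all names and sizes are assumptions chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); every weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    # Queries, keys, and values all come from the same input: self-attention.
    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                  # (heads, seq, d_head)
    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))
out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, attn.shape)  # (6, 8) (2, 6, 6)
```

Each head produces its own `seq_len x seq_len` weight matrix, so different heads are free to capture different relationships within the same input.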

Implementing Attention Mechanisms in Big Data Analysis

To leverage large-scale neural attention mechanisms for data insights, follow these steps:

1. Data Preprocessing

Before applying attention mechanisms, proper preprocessing of your Big Data is crucial. This involves:

- Data Cleaning: Remove duplicates, handle missing values, and filter out noise.

- Data Transformation: Normalize or standardize data values to ensure consistent scales, especially important for neural networks.

- Feature Selection: Identify and select the most relevant features that contribute to the model’s predictions.
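
The cleaning and transformation steps above might look like the following for a small numeric table. This is a sketch under simple assumptions: missing values are represented as `None`, they are imputed with column means, and the function name and sample rows are hypothetical. A real Big Data pipeline would typically run the same logic in a distributed engine.

```python
import numpy as np

def clean_and_standardize(rows):
    """Drop duplicate rows, impute missing values, and standardize columns."""
    # 1. Data cleaning: remove exact duplicates, keeping first-seen order.
    unique_rows = list(dict.fromkeys(tuple(row) for row in rows))
    # 2. Data cleaning: mark missing values as NaN and fill with column means.
    data = np.array(
        [[np.nan if v is None else v for v in row] for row in unique_rows],
        dtype=float,
    )
    col_means = np.nanmean(data, axis=0)
    data = np.where(np.isnan(data), col_means, data)
    # 3. Data transformation: zero mean and unit variance per column.
    std = data.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero for constant columns
    return (data - data.mean(axis=0)) / std

rows = [[1.0, 200.0], [2.0, None], [1.0, 200.0], [3.0, 400.0]]
features = clean_and_standardize(rows)
print(features.shape)  # (3, 2)
```

Standardizing matters here because attention scores are dot products, so a column on a much larger scale would otherwise dominate them.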

2. Model Selection

Choosing the right model architecture is essential. Depending on your specific use case in Big Data, you may consider:

- Transformers: Particularly effective for sequential data, transformers utilize self-attention to better understand context.

- Recurrent Neural Networks (RNNs): RNNs tend to lose information over long sequences. Adding attention lets the model look back at all earlier hidden states directly, which can improve performance.

- Convolutional Neural Networks (CNNs): Often used in image and spatial data, attention mechanisms can augment CNNs by focusing on specific features within images.

3. Model Training

Training your model with a focus on attention mechanisms requires careful consideration of the following:

- Loss Functions: Choose a loss function that matches the task and your business objectives. Mean Squared Error (MSE) is a common choice for regression, while classification typically uses Cross-Entropy Loss.

- Hyperparameter Tuning: Engage in systematic tuning of hyperparameters like learning rates, number of attention heads, and layer sizes to enhance model performance.

- Regularization Techniques: Employ techniques such as dropout or weight decay to prevent overfitting.
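
For reference, the two loss functions and the dropout technique named above can be written out in a few lines of NumPy. This is an illustrative sketch with hypothetical function names; deep learning frameworks provide optimized versions of all three.

```python
import numpy as np

def mse_loss(predictions, targets):
    """Mean Squared Error for regression."""
    return float(np.mean((predictions - targets) ** 2))

def cross_entropy_loss(logits, labels):
    """Cross-entropy for classification.

    logits: (n_samples, n_classes) raw scores, labels: (n_samples,) class ids.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def dropout(x, rate, rng):
    """Inverted dropout: zero a random fraction of entries, rescale the rest.

    Applied during training only; at inference the input passes through as-is.
    """
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

mse = mse_loss(np.array([2.0, 4.0]), np.array([1.0, 2.0]))
ce = cross_entropy_loss(np.zeros((3, 4)), np.array([0, 1, 2]))
print(mse)  # 2.5
print(ce)   # equals ln(4), about 1.386, because the logits are uniform

rng = np.random.default_rng(0)
dropped = dropout(np.ones((2, 5)), rate=0.5, rng=rng)
```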

Using Attention for Feature Importance Insights

One practical advantage of attention mechanisms is that their weights offer a view into what the model emphasized. Attention weights are an indication of feature importance rather than proof of it, but by analyzing them during inference, you can:

- Identify Key Features: Understand which features have the most influence on the model’s predictions.

- Enhance Model Interpretability: Provide stakeholders with clearer insights and rationale behind model decisions.

- Guide Feature Engineering: Use the insights from attention weights to refine and develop new features that could improve model performance.
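
One simple way to act on the points above is to average attention weights over many predictions and rank features by the result. The sketch below assumes a model that exposes one attention weight per feature per sample; the weights and feature names are made up for illustration.

```python
import numpy as np

def rank_features_by_attention(attention_weights, feature_names):
    """Rank features by mean attention weight, highest first.

    attention_weights: (n_samples, n_features), one row per prediction.
    """
    mean_weights = attention_weights.mean(axis=0)
    order = np.argsort(mean_weights)[::-1]
    return [(feature_names[i], float(mean_weights[i])) for i in order]

# Hypothetical attention weights collected during inference.
attention_weights = np.array([
    [0.6, 0.3, 0.1],
    [0.5, 0.2, 0.3],
    [0.7, 0.2, 0.1],
])
feature_names = ["purchase_amount", "account_age", "region"]
ranking = rank_features_by_attention(attention_weights, feature_names)
for name, weight in ranking:
    print(f"{name}: {weight:.3f}")
```

A high average weight suggests the model consulted that feature often; it is worth confirming such findings with another method, such as ablating the feature, before acting on them.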

Deploying Attention-Enhanced Models

After training and analyzing your attention-enhanced model, proper deployment in a Big Data environment is vital. Key considerations include:

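- Scalability: Ensure your model can handle large datasets, in real time where required. Use distributed processing frameworks such as Apache Spark for data throughput, and container orchestration platforms such as Kubernetes to scale model-serving instances.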

- Latency Optimization: Optimize model inference times to meet real-time processing requirements.

- Monitoring and Maintenance: Implement monitoring tools to track model performance and flag any declines or drifts in accuracy over time.
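
As an example of the monitoring point above, a rolling-window accuracy check can flag a decline once labeled outcomes become available. The class name, window size, and thresholds below are assumptions for illustration; production systems usually rely on a dedicated monitoring stack.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag drops."""

    def __init__(self, window_size, baseline_accuracy, tolerance):
        self.window = deque(maxlen=window_size)  # old results fall off the front
        self.baseline = baseline_accuracy
        self.tolerance = tolerance

    def record(self, prediction, label):
        self.window.append(prediction == label)

    def accuracy(self):
        return sum(self.window) / len(self.window) if self.window else None

    def has_drifted(self):
        """True once the window is full and accuracy is below baseline - tolerance."""
        if len(self.window) < self.window.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance

monitor = AccuracyMonitor(window_size=4, baseline_accuracy=0.9, tolerance=0.1)
for prediction, label in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, label)
healthy = monitor.has_drifted()   # False: all four recent predictions correct

for prediction, label in [(1, 0), (0, 1)]:
    monitor.record(prediction, label)
degraded = monitor.has_drifted()  # True: accuracy fell to 0.5, below 0.8
print(healthy, degraded)
```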

Tools and Technologies for Implementing Neural Attention Mechanisms

Numerous tools and frameworks are available to assist with implementing neural attention mechanisms in Big Data. Some of these include:

- TensorFlow: A robust open-source platform that facilitates building and training deep learning models, including attention architectures.

- PyTorch: Known for its easy debugging and dynamic computation graph, PyTorch is particularly popular for research and development of attention mechanisms.

- Keras: A user-friendly high-level API, most often used with TensorFlow, Keras simplifies the building of neural networks and offers layers specifically for attention, such as Attention and MultiHeadAttention.

- Hugging Face Transformers: This library provides pre-trained transformer models, making it easier to apply attention mechanisms to various NLP tasks.

- Apache Spark MLlib: Spark's distributed machine learning library. MLlib does not include attention layers itself, but it is useful for large-scale preprocessing and feature pipelines that feed attention models trained in a deep learning framework.

Challenges in Using Attention Mechanisms for Big Data Insights

While attention mechanisms offer incredible advantages, several challenges must be addressed:

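- Computational Cost: Training large models with attention is resource-intensive. Standard self-attention compares every position with every other position, so compute and memory grow quadratically with sequence length, and high-performance computing resources may be required.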

- Overfitting Risk: Without proper regularization, attention models can overfit on smaller datasets. Monitoring and validation strategies are crucial.

- Limited Interpretability: Although attention weights provide some insight into model behavior, they do not always faithfully reflect which inputs drove a prediction, and large multi-layer, multi-head models add complexity that can hinder interpretation.
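
A back-of-the-envelope calculation shows why computational cost deserves attention. The sketch below estimates the memory needed just to store the attention weights of one standard self-attention layer; the head count and 4-byte values are assumed settings, and real memory use depends on the implementation.

```python
def attention_matrix_bytes(seq_len, num_heads, batch_size=1, bytes_per_value=4):
    """Memory for the (seq_len x seq_len) attention weights of one layer."""
    return batch_size * num_heads * seq_len * seq_len * bytes_per_value

for seq_len in (1_000, 2_000, 4_000):
    mib = attention_matrix_bytes(seq_len, num_heads=8) / 2**20
    print(f"seq_len={seq_len}: {mib:.1f} MiB")
# seq_len=1000: 30.5 MiB
# seq_len=2000: 122.1 MiB
# seq_len=4000: 488.3 MiB
```

Doubling the sequence length quadruples the memory, which is why long inputs quickly become expensive.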

Future Trends in Attention Mechanisms for Big Data

The field of large-scale neural attention mechanisms is rapidly evolving. Emerging trends include:

- Hybrid Models: Combining attention mechanisms with traditional models to harness the strengths of both methodologies.

- Multimodal Processing: Developing attention models capable of processing different types of data (text, images, audio) simultaneously to derive richer insights.

- Efficient Transformers: Continued research into optimizing transformer architectures for efficiency, reducing the computational burden while maintaining performance.
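
As one illustration of the efficiency idea above, sliding-window (local) attention restricts each position to its nearby neighbors. This is only one of several approaches and is chosen here as an example, not as the method the article prescribes. The NumPy sketch below shows the masking logic; it still builds the full score matrix, whereas an efficient implementation would compute only the entries inside the window. All names are hypothetical.

```python
import numpy as np

def sliding_window_mask(seq_len, window):
    """True where position i may attend to position j (|i - j| <= window)."""
    idx = np.arange(seq_len)
    return np.abs(idx[:, None] - idx[None, :]) <= window

def local_self_attention(x, window):
    """Self-attention in which each position only sees nearby positions."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    # Masked-out positions get -inf so they receive zero weight after softmax.
    scores = np.where(sliding_window_mask(len(x), window), scores, -np.inf)
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ x, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))
out, weights = local_self_attention(x, window=1)
print((weights > 0).sum(axis=1))  # at most 2 * window + 1 = 3 non-zero weights per row
```

With a fixed window, the number of useful score entries grows linearly with sequence length instead of quadratically.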

Large-scale neural attention mechanisms offer a powerful way to extract meaningful, actionable patterns from vast and complex datasets. By employing them, organizations can strengthen their data analysis capabilities, make better-informed decisions, and gain a competitive edge in the age of Big Data.