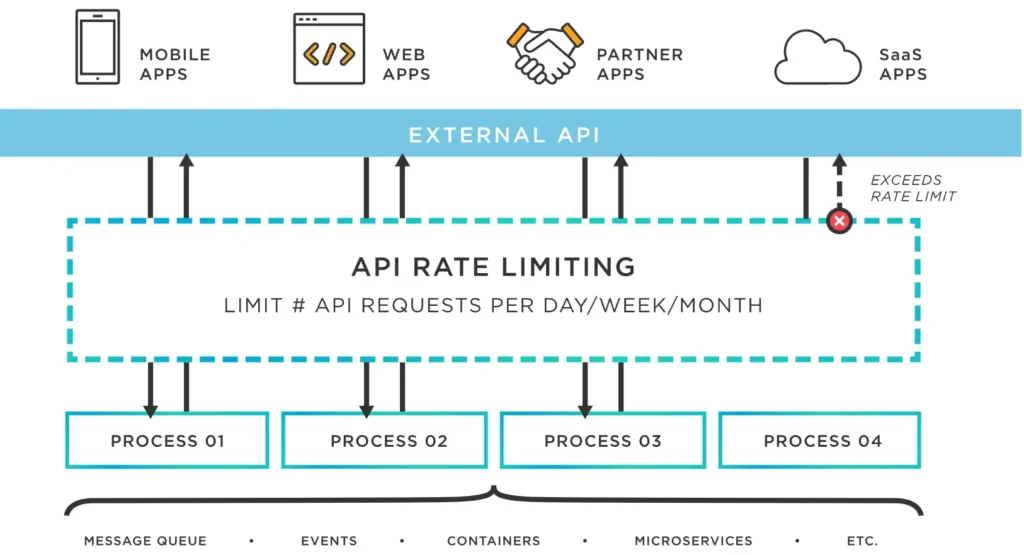

API consumer rate limits are restrictions set by API providers to control the number of requests that can be made by a consumer within a certain amount of time. This is important to prevent abuse, ensure fair usage, and maintain the overall performance and availability of the API. Implementing rate limits usually involves defining limits based on criteria such as the number of requests per second, minute, or hour, identifying unique consumers using API keys or tokens, and handling any exceeding requests with appropriate error responses (such as HTTP status code 429 – Too Many Requests). Rate limiting can be implemented directly in the API code or through API management tools and services, offering flexibility in configuration and monitoring options. By effectively managing rate limits, API providers can strike a balance between providing access to their services and protecting their systems from potential misuse or overload.

Understanding API Rate Limiting

APIs, or Application Programming Interfaces, enable applications to communicate with each other. Because any consumer can send requests at whatever pace it chooses, providers need a way to bound that traffic. This is where API consumer rate limits come into play. Rate limiting caps how many requests a consumer may send to an API, or to a specific endpoint, within a given time window. It prevents abuse and ensures fair usage for all consumers.

Rate limits are important for protecting server resources and ensuring that no single user consumes an undue amount of bandwidth or processing power. In this article, we will explore the intricacies of API consumer rate limits and how to implement them effectively.

Why Implement API Consumer Rate Limits?

Implementing API consumer rate limits offers several benefits, including:

- Preventing Abuse: By limiting the number of requests a consumer can make, you can minimize the risk of abusive behavior, such as DDoS attacks or rapidly querying the system to extract large amounts of data.

- Ensuring Fair Usage: Rate limits promote fair resource distribution among consumers. This is particularly vital when multiple users or applications share the same API.

- Improving Stability: Limiting the rate of incoming requests helps maintain server stability, performance, and response times even during peak loads.

- Enhancing Security: Rate limiting can serve as an additional security layer, helping to mitigate exploitation of vulnerabilities in the API.

Key Concepts of API Rate Limiting

To effectively implement API consumer rate limits, it is vital to understand the key concepts involved:

1. Rate Limit Window

The rate limit window refers to the time frame within which requests are counted. Common windows include:

- Per Minute: A set number of requests allowed every minute.

- Per Hour: A specified number of requests allowed in an hour.

- Per Day: A defined limit for the entire day.

2. Allowed Requests

Each rate limit window defines the maximum number of requests an API consumer can make. This could vary based on the consumer’s tier of service or use case. For example, a free tier might allow 100 requests per hour, while a premium tier could allow 1000 requests per hour.
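As a minimal sketch, the tier example above could be expressed as a lookup table. The tier names, the `TierLimit` type, and the `limit_for` helper are hypothetical, and the numbers are simply the ones from the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierLimit:
    max_requests: int    # allowed requests per window
    window_seconds: int  # length of the rate limit window


# Hypothetical tiers mirroring the example figures above.
TIER_LIMITS = {
    "free": TierLimit(max_requests=100, window_seconds=3600),
    "premium": TierLimit(max_requests=1000, window_seconds=3600),
}


def limit_for(tier: str) -> TierLimit:
    """Return the limit for a tier, falling back to the most restrictive one."""
    return TIER_LIMITS.get(tier, TIER_LIMITS["free"])
```

Falling back to the free tier for unknown tier names is one possible design choice; rejecting the request outright would be another.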

3. Rate Limit Headers

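APIs typically communicate rate limit information through HTTP headers. The names below are a widely used convention rather than a formal standard, so exact names and semantics vary by provider. Common headers include: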

- X-RateLimit-Limit: The maximum number of requests allowed within a window.

- X-RateLimit-Remaining: The number of requests remaining in the current window.

- X-RateLimit-Reset: The time (in UTC epoch seconds) when the rate limit will reset.
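A server might assemble these three headers roughly as follows. This is only a sketch: the function name `rate_limit_headers` and its parameters are assumptions made for illustration, and it assumes a window that starts at a known epoch timestamp.

```python
import math


def rate_limit_headers(limit: int, used: int, window_start: float,
                       window_seconds: int) -> dict:
    """Build the three X-RateLimit-* headers for the current window."""
    remaining = max(0, limit - used)  # never report a negative remainder
    reset_at = math.ceil(window_start + window_seconds)  # UTC epoch seconds
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(reset_at),
    }
```

For example, a consumer who has used 40 of 100 requests in a one-hour window would see `X-RateLimit-Remaining: 60`.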

4. Throttling and Banning

When consumers exceed their rate limit, APIs may respond in various ways. The most common is throttling: excess requests are rejected with HTTP status code 429 (Too Many Requests) until the limit resets. In severe or repeated cases of abuse, a provider may suspend or permanently ban the offending consumer.

Methods for Implementing API Consumer Rate Limits

Implementing API consumer rate limits can be achieved through several methods:

1. Token Bucket Algorithm

The Token Bucket Algorithm allows consumers to accumulate a certain amount of tokens, where each request deducts a token. Tokens refill at a specified rate, allowing for bursts of requests. This mechanism provides flexibility for users who may not need to consume their entire quota at once.
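The following is a minimal, single-threaded sketch of a token bucket. The class name, the parameter names, and the injectable `clock` argument are assumptions made for illustration; a production limiter would also need locking and per-consumer storage.

```python
import time


class TokenBucket:
    """Each request spends tokens; tokens refill continuously up to a cap."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = capacity  # start full so an initial burst is allowed
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add the tokens earned since the last call, capped at capacity.
        self._tokens = min(self.capacity,
                           self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

With `TokenBucket(capacity=3, refill_per_second=1.0)`, a consumer can send three requests back to back and then about one per second afterwards. The capacity bounds the burst size, while the refill rate bounds the long-run average.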

2. Leaky Bucket Algorithm

In the Leaky Bucket Algorithm, requests are processed at a constant rate, regardless of how many requests are submitted. This provides predictable API performance, preventing excessive bursts from overwhelming the server, while enforcing a strict rate limit.
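One way to sketch this is as a queue that schedules each accepted request for a departure time, spacing departures by a fixed interval. The class and method names here are hypothetical, and the caller passes in the current time so the example stays deterministic.

```python
from collections import deque
from typing import Optional


class LeakyBucket:
    """Queues requests and releases them no faster than one per interval."""

    def __init__(self, capacity: int, leak_interval: float):
        self.capacity = capacity            # max requests waiting in the bucket
        self.leak_interval = leak_interval  # seconds between processed requests
        self._departures = deque()          # scheduled times of waiting requests
        self._last_departure = float("-inf")

    def submit(self, now: float) -> Optional[float]:
        """Return the time the request will be processed, or None on overflow."""
        # Forget requests that have already leaked out of the bucket.
        while self._departures and self._departures[0] <= now:
            self._departures.popleft()
        if len(self._departures) >= self.capacity:
            return None  # bucket is full, so the request is dropped
        departure = max(now, self._last_departure + self.leak_interval)
        self._departures.append(departure)
        self._last_departure = departure
        return departure
```

Four requests submitted at the same instant to `LeakyBucket(capacity=2, leak_interval=1.0)` are scheduled at 0, 1, and 2 seconds, and the fourth is dropped, so downstream load stays constant however bursty the input is.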

3. Fixed Window

The Fixed Window method allows a set number of requests during a specified time frame. Once the limit is reached, subsequent requests are blocked until the next window begins. While simple to implement, it allows bursts around window boundaries: a consumer can exhaust the limit at the end of one window and again at the start of the next, briefly sending up to twice the limit.
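A fixed window counter can be sketched in a few lines. The class name, the in-memory dictionary, and the injectable `clock` are assumptions for illustration; a real deployment would usually keep the counters in a shared store.

```python
import time


class FixedWindowLimiter:
    """Counts requests per consumer in consecutive, non-overlapping windows."""

    def __init__(self, limit: int, window_seconds: float, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._state = {}  # consumer_id -> (window index, requests counted)

    def allow(self, consumer_id: str) -> bool:
        window = int(self._clock() // self.window_seconds)
        seen_window, count = self._state.get(consumer_id, (window, 0))
        if seen_window != window:
            count = 0  # a new window has begun, so the counter starts over
        if count >= self.limit:
            self._state[consumer_id] = (window, count)
            return False
        self._state[consumer_id] = (window, count + 1)
        return True
```

Because the counter resets the moment a new window starts, a consumer limited to 2 requests per 60 seconds can send 2 requests at second 59 and 2 more at second 60.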

4. Sliding Window

Using the Sliding Window technique, the limit is evaluated over a window that moves with each request, for example the last 60 seconds, rather than over fixed clock intervals. This avoids the usage spikes that the Fixed Window method allows around window boundaries while keeping a simple requests-per-period rule, offering a more refined mechanism for controlling usage.
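One common variant, sometimes called a sliding window log, keeps the timestamps of recent requests and counts only those inside the moving window. The sketch below assumes that variant; the class name and the `clock` parameter are hypothetical.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most `limit` requests in any trailing `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float,
                 clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._logs = defaultdict(deque)  # consumer_id -> request timestamps

    def allow(self, consumer_id: str) -> bool:
        now = self._clock()
        log = self._logs[consumer_id]
        cutoff = now - self.window_seconds
        # Discard timestamps that have slid out of the window.
        while log and log[0] <= cutoff:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True
```

The trade-off is memory: the log stores one timestamp per accepted request, so providers with high limits often approximate it with weighted counters instead.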

Best Practices for API Rate Limiting

Here are some best practices to consider when implementing API consumer rate limits:

1. Define Clear Rate Limits

Establish well-defined and transparent rate limits for your API consumers. This is crucial for maintaining trust and allowing users to plan their usage accordingly.

2. Monitor Usage Patterns

Continuously monitor API usage patterns. This data can help in adjusting rate limits over time based on actual usage, ensuring optimal performance and user satisfaction.

3. Provide Rate Limit Information

Always provide real-time feedback regarding the rate limits in the response headers. This transparency enables API consumers to manage their requests effectively.

4. Implement Graceful Error Handling

When a consumer exceeds the rate limit, return HTTP 429 errors and include meaningful messages in response bodies. This helps users understand why their requests are being blocked and encourages them to adjust their behavior.
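A rate-limited response might be built as in the sketch below. The function name, the JSON field names, and the message wording are assumptions; `Retry-After` is a standard HTTP header that is not mentioned above but is often sent alongside a 429.

```python
import json
import math


def too_many_requests(limit: int, window_seconds: int,
                      retry_after_seconds: float):
    """Return (status, headers, body) for a request that exceeded the limit."""
    retry_after = max(1, math.ceil(retry_after_seconds))  # whole seconds
    body = {
        "error": "rate_limit_exceeded",  # hypothetical machine-readable code
        "message": (
            f"Limit of {limit} requests per {window_seconds} seconds "
            f"exceeded. Retry in {retry_after} seconds."
        ),
    }
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after),
    }
    return 429, headers, json.dumps(body)
```

Stating both the limit and the wait time in the body lets a consumer adjust without having to consult the documentation.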

5. Offer Tiered Access

Consider implementing tiered access levels for different types of consumers. By offering premium tiers with higher rate limits, you can monetize your API effectively while ensuring that critical users receive the capacity they need.

Examples of Rate Limit Implementation

Below are some examples of how different APIs implement consumer rate limits:

1. GitHub API

The GitHub API limits unauthenticated requests to 60 per hour, while authenticated requests are allowed up to 5,000 per hour. GitHub provides all relevant rate limit information in response headers.
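On the consumer side, those headers can be used to decide how long to wait before retrying. The helper below is a sketch that works on a plain dictionary of response headers; the function name is hypothetical, and it assumes the reset header carries UTC epoch seconds, as GitHub's does.

```python
def seconds_until_reset(headers: dict, now: float) -> float:
    """Return how long to wait before retrying, or 0.0 if requests remain."""
    # Header names are case-insensitive, so normalize before looking them up.
    normalized = {name.lower(): value for name, value in headers.items()}
    remaining = int(normalized.get("x-ratelimit-remaining", "1"))
    if remaining > 0:
        return 0.0
    reset_at = int(normalized.get("x-ratelimit-reset", "0"))
    return max(0.0, reset_at - now)
```

A client would typically call this after each response, passing `time.time()` as `now`, and sleep for the returned duration before sending the next request.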

2. Twitter API

The Twitter API applies different limits to different endpoints, counted over fixed time windows. Specific endpoints have their own rules, such as 15 requests every 15 minutes for certain actions.

3. Google Maps API

The Google Maps API ties its limits to billing rather than to a simple request counter. Users are billed based on usage, with a pre-defined number of free calls provided before additional costs are incurred.

Conclusion

By understanding and implementing API consumer rate limits, developers can secure their API resources, ensure fair usage among consumers, and maintain optimal system performance. Given the growing reliance on APIs in modern application development, having effective rate limiting mechanisms in place is not just recommended; it is essential for a robust API strategy.

In short, an API consumer rate limit controls the rate at which a consumer can make requests to an API. Rate limits are typically enforced by identifying consumers with API keys and applying throttling and usage quotas. Setting and enforcing appropriate limits helps balance the needs of consumers and providers.