API latency, the delay between sending a request and receiving its response, is one of the most critical performance metrics for applications that rely on APIs and web services. High latency degrades user experience and can lead to lost opportunities. This guide explores techniques and best practices for reducing API latency and optimizing response times so that your APIs deliver fast, reliable, and responsive experiences to users.

Understanding API Latency

API latency refers to the time it takes for an API request to travel from the client to the server and back. It encompasses multiple factors, including network transmission time, server processing time, and data preparation time. Understanding the sources of latency is essential to implement effective reduction strategies.
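To see which source dominates, it helps to time each phase separately. The sketch below is a minimal illustration using only the Python standard library. The class name `LatencyBreakdown` and the phase names are assumptions made for this example, and it only measures time spent inside the server process, not network transmission.

```python
import time
from contextlib import contextmanager


class LatencyBreakdown:
    """Accumulates wall-clock time per named phase, in milliseconds."""

    def __init__(self):
        self.phases = {}

    @contextmanager
    def phase(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.phases[name] = self.phases.get(name, 0.0) + elapsed_ms

    def total_ms(self):
        return sum(self.phases.values())


# Hypothetical request handler split into two phases.
breakdown = LatencyBreakdown()
with breakdown.phase("processing"):
    time.sleep(0.01)  # stand-in for business logic or a database call
with breakdown.phase("data_preparation"):
    body = ",".join(str(i) for i in range(1000))  # stand-in for serialization
```

After a request, `breakdown.phases` shows how the server-side time was split, which indicates whether processing or data preparation deserves attention first.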

1. Optimize API Design

Well-designed APIs are crucial for minimizing latency. Here are some practices to consider:

1.1 Use RESTful Principles

Implementing RESTful APIs can often lead to lower latency because of their statelessness and cacheable nature. By adhering to these principles, you can enhance performance:

- Stateless Operations: Each request from the client should contain all the information needed to process it.

- Resource-Based URLs: Use predictable, resource-oriented endpoints so that responses are straightforward to route and cache.

1.2 Employ Pagination and Filtering

When working with large datasets, ensure your API supports pagination and filtering. This reduces the amount of data transferred over the network, minimizing latency:

- Pagination: Break large responses into smaller chunks.

- Filtering: Allow clients to request only the necessary data, reducing load time.
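Pagination and field filtering can be sketched in a few lines. The function below is an illustration only: the name `paginate`, its parameters, and the response envelope are assumptions for this example, and a real API would usually push the limit and offset down into the database query rather than slicing a list in memory.

```python
from typing import Any, Optional


def paginate(items: list, page: int = 1, per_page: int = 20,
             fields: Optional[list] = None) -> dict:
    """Return one page of `items`, optionally trimmed to the requested fields."""
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    start = (page - 1) * per_page
    chunk = items[start:start + per_page]
    if fields is not None:
        # Keep only the keys the client asked for.
        chunk = [{k: row[k] for k in fields if k in row} for row in chunk]
    total_pages = (len(items) + per_page - 1) // per_page  # ceiling division
    return {
        "page": page,
        "per_page": per_page,
        "total": len(items),
        "total_pages": total_pages,
        "data": chunk,
    }
```

A client that requests page 3 with `fields=["id", "name"]` receives only the last few records and only two keys per record, instead of the full dataset.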

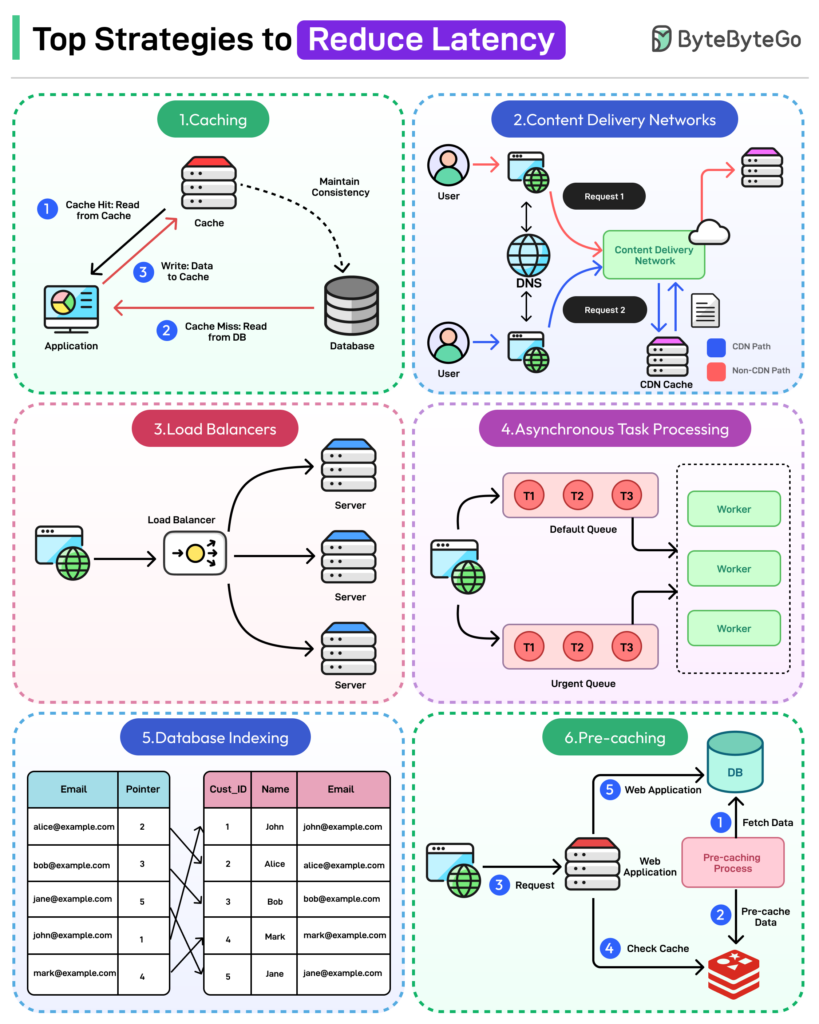

2. Utilize Caching Mechanisms

Implementing caching strategies is one of the most effective ways to reduce latency:

2.1 Client-Side Caching

Encourage clients to cache responses from your API. By storing previously fetched data, you can significantly reduce the number of requests made to the server:

- HTTP Cache Headers: Use appropriate cache headers to control how caches behave.

- ETag and Last-Modified: Implement these headers to help clients validate cached responses.
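The validation flow with ETag works roughly as follows: the server attaches an ETag to each response, the client sends it back in an `If-None-Match` header, and the server answers `304 Not Modified` with an empty body when nothing has changed. The sketch below is framework-independent. The function names `make_etag` and `respond`, and the 60-second `max-age`, are assumptions chosen for illustration.

```python
import hashlib
import json
from typing import Optional


def make_etag(body: bytes) -> str:
    """Derive a strong ETag from the response body (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(resource: dict, if_none_match: Optional[str] = None):
    """Return (status, headers, body), skipping the body when the client copy is fresh."""
    body = json.dumps(resource, sort_keys=True).encode("utf-8")
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match == etag:
        # The client's cached copy is still valid: send no payload.
        return 304, headers, b""
    return 200, headers, body
```

The first request returns the full body with status 200. A repeat request that carries the same ETag returns 304 with no body, which saves transfer time even though the round trip still happens.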

2.2 Server-Side Caching

Implement caching mechanisms on the server side to reduce the processing time for frequently requested data:

- Memory Caching: Utilize in-memory stores like Redis or Memcached to keep often-used data readily available.

- Database Caching: Optimize database queries and cache query results where applicable.
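As a minimal sketch of the idea, the class below caches loaded values in process memory with a time-to-live. It is a stand-in for a shared store such as Redis or Memcached, not a replacement for one. The name `TTLCache`, the `get_or_load` method, and the injectable clock are assumptions made for this example.

```python
import time
from typing import Any, Callable


class TTLCache:
    """In-process cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive call
        value = loader()  # cache miss or expired: hit the slow backend
        self._store[key] = (now + self._ttl, value)
        return value
```

Wrapping a slow database query in `cache.get_or_load("user:1", fetch_user)` (where `fetch_user` is a hypothetical loader) means the query runs at most once per TTL window for that key. Choosing the TTL is a trade-off between freshness and load.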

3. Optimize Network Performance

Network performance is a significant contributor to API latency. Here are some strategies to improve it:

3.1 Content Delivery Networks (CDNs)

Employing a Content Delivery Network (CDN) can help reduce latency by caching responses closer to the end-user:

- Global Distribution: CDNs have servers located worldwide, decreasing the physical distance data has to travel.

- Static Resource Caching: Use CDNs to serve static resources (such as images, stylesheets, and scripts), freeing up your server’s bandwidth.

3.2 Optimize Data Transfer

Minimize the volume of data transmitted over the network:

- Compression: Use Gzip or Brotli compression to reduce payload sizes.

- Minification: Minify JSON and other data formats to streamline data transfer.
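Both steps can be tried with the Python standard library. The sketch below minifies JSON by dropping optional whitespace and then applies Gzip. Brotli is omitted only because it needs a third-party package. The function name `compress_json` and the sample payload are assumptions for illustration, and in production the web server or framework usually handles compression based on the client's `Accept-Encoding` header.

```python
import gzip
import json


def compress_json(payload):
    """Return (minified_bytes, gzipped_bytes) for a JSON-serializable payload."""
    # Compact separators remove the spaces json.dumps inserts by default.
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return raw, gzip.compress(raw)


# Hypothetical response body with repetitive structure.
records = [{"id": i, "status": "active", "region": "eu-west"} for i in range(200)]
raw, packed = compress_json(records)
ratio = len(packed) / len(raw)  # fraction of the original size sent on the wire
```

Repetitive JSON like this tends to compress well. Very small payloads may not benefit, because the Gzip header and the CPU cost can outweigh the savings.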

4. Employ Asynchronous Processing

Asynchronous processing can greatly improve perceived latency:

4.1 Background Job Processing

For time-consuming tasks, consider offloading them to background processes:

- Use message queues like RabbitMQ or Apache Kafka to handle tasks without blocking the client.

- Implement webhooks for event-based responses, allowing clients to be notified when their data is ready.
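The pattern can be illustrated with an in-process queue and a worker thread: the request handler enqueues the job and returns an identifier immediately, while the worker finishes the task later. This is a sketch under stated assumptions, not a production setup. `queue.Queue` stands in for a broker such as RabbitMQ or Kafka, and `slow_task`, `submit`, and the `results` dictionary are hypothetical names.

```python
import queue
import threading
import time
import uuid

jobs = queue.Queue()
results = {}
results_lock = threading.Lock()


def slow_task(n: int) -> int:
    """Stand-in for an expensive operation such as report generation."""
    time.sleep(0.05)
    return n * n


def submit(payload: int) -> str:
    """Enqueue a job and return its id right away (the '202 Accepted' path)."""
    job_id = uuid.uuid4().hex
    with results_lock:
        results[job_id] = {"status": "pending"}
    jobs.put((job_id, payload))
    return job_id


def worker() -> None:
    while True:
        job_id, payload = jobs.get()
        try:
            outcome = {"status": "done", "result": slow_task(payload)}
        except Exception as exc:  # record the failure instead of killing the worker
            outcome = {"status": "failed", "error": str(exc)}
        with results_lock:
            results[job_id] = outcome
        jobs.task_done()


threading.Thread(target=worker, daemon=True).start()
```

The client can poll a status endpoint with the returned job id. With webhooks, the worker would instead call back to the client once the outcome is stored.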

5. Monitor and Analyze API Performance

Constant monitoring is vital for maintaining low latency:

5.1 Use Application Performance Monitoring (APM) Tools

Implement APM tools to keep tabs on your API’s performance:

- Track metrics such as response time (averages and tail percentiles like p95 and p99), error rates, and throughput.

- Utilize tools like New Relic or Datadog to gain insights into performance bottlenecks.

5.2 Evaluate Response Time and Latency

Establish benchmarks for acceptable latency and regularly review performance data:

- Use analytics to track how latency impacts user satisfaction and engagement.

- Adjust your strategies based on data-driven insights.
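When reviewing performance data against a benchmark, averages alone can hide slow outliers, so it is common to look at percentiles as well. The sketch below summarizes a list of response times using the standard library. The function name `latency_summary` and the sample values are made up for illustration.

```python
import statistics


def latency_summary(samples_ms):
    """Summarize response times (milliseconds) as mean, median, and tail percentiles."""
    # quantiles(n=100) returns the 99 cut points p1..p99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }


# Hypothetical sample: most requests are fast, a few are very slow.
samples = [10.0] * 95 + [500.0] * 5
summary = latency_summary(samples)
```

In this sample the median stays at the fast value while p99 lands on the slow requests, which is why a latency benchmark is often stated as a percentile target rather than an average.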

6. Scale Infrastructure Appropriately

As your application grows, scaling your infrastructure becomes essential:

6.1 Load Balancing

Implement load balancers to distribute traffic evenly across servers:

- Horizontal Scaling: Add more servers to handle incoming requests.

- Application Delivery Controllers (ADCs): Use these devices to optimize load distribution and reduce latency.
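The simplest way to distribute traffic evenly is round-robin selection. The sketch below shows only the selection logic. The class name `RoundRobinBalancer` and the backend addresses are assumptions, and real load balancers add health checks, weighting, and connection awareness on top of this.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)

    def next_backend(self):
        return next(self._cycle)


# Hypothetical pool of three application servers.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
targets = [balancer.next_backend() for _ in range(4)]
```

Adding a server under horizontal scaling then amounts to constructing the balancer with a longer backend list.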

6.2 Use Microservices Architecture

Consider breaking down your monolithic API into microservices:

- Separating functionalities can improve scalability and reduce the load on individual services.

- Each microservice can be optimized for its specific task, leading to enhanced performance.

7. Optimize Backend Performance

Finally, backend optimizations can significantly affect API latency:

7.1 Database Optimization

Improve database performance by:

- Using indexing to speed up query performance.

- Continuously reviewing and optimizing SQL queries.
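The effect of an index can be checked by asking the database for its query plan before and after creating one. The sketch below uses an in-memory SQLite database as a convenient stand-in. The `orders` table, its columns, and the index name are hypothetical, and other databases expose the same idea through their own `EXPLAIN` syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)


def query_plan(sql, params=()):
    """Return SQLite's human-readable plan for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # the fourth column holds the plan text


QUERY = "SELECT total FROM orders WHERE customer_id = ?"
plan_before = query_plan(QUERY, (42,))  # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(QUERY, (42,))   # index lookup
```

Before the index, the plan reports a scan of the whole table. Afterwards it reports a search using `idx_orders_customer`, which is the kind of change to look for when reviewing slow queries.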

7.2 Use of Efficient Data Formats

Select the most efficient data formats for your API:

- JSON and Protobuf: While JSON is widely used, consider using Protocol Buffers (Protobuf) for a more efficient data serialization format.

- Binary Formats: Consider binary formats for transferring large datasets or high-frequency updates.
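To get a feel for the size difference, the sketch below encodes the same readings as JSON and as a fixed-width binary layout using Python's `struct` module. This is not Protobuf. It is a standard-library stand-in chosen so the example runs without extra packages, and the record layout (a 32-bit sensor id plus a 64-bit float) is an assumption for illustration.

```python
import json
import struct

# Little-endian: unsigned 32-bit sensor id followed by a 64-bit float (12 bytes).
RECORD = struct.Struct("<Id")


def encode_binary(readings):
    """Pack (sensor_id, value) pairs into a contiguous byte string."""
    return b"".join(RECORD.pack(sensor_id, value) for sensor_id, value in readings)


def decode_binary(blob):
    """Unpack the byte string back into (sensor_id, value) tuples."""
    return [RECORD.unpack_from(blob, offset) for offset in range(0, len(blob), RECORD.size)]


def encode_json(readings):
    """Encode the same readings as a JSON array of objects."""
    return json.dumps([{"sensor_id": s, "value": v} for s, v in readings]).encode("utf-8")


readings = [(i, i * 0.25) for i in range(100)]
binary_size = len(encode_binary(readings))  # 12 bytes per record
json_size = len(encode_json(readings))      # field names repeated in every record
```

The binary form is smaller because it carries no field names or punctuation, at the cost of both sides having to agree on the layout in advance. Schema-based formats such as Protobuf manage that agreement for you.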

By implementing these strategies, including caching, load balancing, database query optimization, efficient data formats, and CDNs, you can significantly reduce API latency and improve the performance of your applications. Understanding the contributing factors allows you to tailor solutions to your specific needs. Prioritizing latency reduction improves responsiveness, scalability, and reliability, which in turn supports customer satisfaction and competitive advantage.