Large-scale big data models often reflect the prejudices in the datasets used to train them, which leads to skewed insights and unfair decisions. Artificial Intelligence (AI) techniques can help: machine learning methods can analyze large volumes of data to identify, measure, and mitigate biases that affect decision-making. This article explores how AI can reduce bias in large-scale big data models and improve their fairness and accuracy.

Understanding Bias in Big Data Models

Bias in big data refers to systematic errors that lead to unfair outcomes in predictions or decisions made by data-driven systems. These biases can stem from various sources, including:

- Data Collection Bias: This occurs when the data collected is not representative of the intended population.

- Labeling Bias: Labels assigned by people reflect the annotators' subjective judgment, so their assumptions and inconsistencies can be carried into the training data.

- Algorithmic Bias: Algorithms themselves may perpetuate bias if they are designed without considering fairness or inclusivity.

Identifying and addressing these biases is crucial, as they can lead to discrimination in areas such as hiring practices, law enforcement, and healthcare.
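
As a rough illustration of checking for data collection bias, the sketch below compares each group's share of a sample with its share of the intended population. The function name `representation_gap`, the group labels, and the population shares are all hypothetical values chosen for the example, not figures from any real dataset.

```python
from collections import Counter

def representation_gap(sample_groups, population_shares):
    """Return (sample share - population share) for each group.

    A negative value means the group is underrepresented in the sample.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }

# Hypothetical data: group "B" makes up 20% of the sample
# but is assumed to be 40% of the intended population.
sample = ["A"] * 80 + ["B"] * 20
gaps = representation_gap(sample, {"A": 0.6, "B": 0.4})
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large negative gap for a group is a signal to collect more data for that group or to compensate with one of the mitigation techniques described later.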

AI Techniques for Bias Mitigation

AI offers several methods and techniques to identify, measure, and reduce bias in big data models:

1. Pre-processing Techniques

Pre-processing involves adjusting the training data before it is used to build a model. Techniques include:

- Data Augmentation: This involves creating synthetic data to balance the dataset and ensure underrepresented groups are adequately represented.

- Reweighing: Assigning different weights to various data samples can help in balancing the influence of each group in the model.

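- Data Cleaning: Removing or correcting mislabeled, duplicated, or unrepresentative records can reduce skew in the model's outcomes, although dropping records carelessly can itself reduce the coverage of some groups.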
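
The reweighing idea can be sketched in a few lines. This is one common formulation, assumed here for illustration: each sample gets the weight P(group) * P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The function name and the toy data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each sample by P(group) * P(label) / P(group, label)."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, target):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == target]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)

# Hypothetical data: group "A" has 3 of 4 positive labels, group "B" has 1 of 4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(rate_a, rate_b)
```

The weights can then be passed to any learner that accepts per-sample weights. Rare combinations, such as a positive label in a mostly negative group, receive the largest weights.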

2. In-processing Techniques

These techniques focus on modifying the learning algorithm during model training:

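- Fairness Constraints: By building fairness constraints into the training objective, for example a penalty on the difference in positive prediction rates between groups, developers can limit how much the outcomes favor one group over another.

- Adversarial Training: This approach trains a secondary model (the adversary) to predict the protected attribute from the primary model's outputs, while the primary model is trained to stay accurate and make the adversary's task harder. The less the adversary can recover, the less the predictions depend on group membership.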
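
A fairness constraint is often approximated as a penalty term added to the loss. The sketch below is a minimal, assumed setup rather than a reference implementation: a small logistic regression trained by gradient descent on log-loss plus `penalty * gap**2`, where `gap` is the difference in mean predicted probability between two groups coded 0 and 1. All names, hyperparameters, and data are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, features):
    return [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) for x in features]

def parity_gap(probs, groups):
    """Mean predicted probability of group 0 minus that of group 1."""
    g0 = [p for p, g in zip(probs, groups) if g == 0]
    g1 = [p for p, g in zip(probs, groups) if g == 1]
    return sum(g0) / len(g0) - sum(g1) / len(g1)

def train(features, labels, groups, penalty=0.0, lr=0.1, epochs=500):
    """Minimise mean log-loss + penalty * parity_gap**2 by gradient descent."""
    n, d = len(features), len(features[0])
    n0, n1 = groups.count(0), groups.count(1)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        probs = predict_proba(w, b, features)
        gap = parity_gap(probs, groups)
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y, g, p in zip(features, labels, groups, probs):
            # Gradient of the mean log-loss with respect to the logit.
            delta = (p - y) / n
            # Gradient of penalty * gap**2 with respect to the logit.
            sign = 1.0 / n0 if g == 0 else -1.0 / n1
            delta += 2.0 * penalty * gap * sign * p * (1.0 - p)
            for j in range(d):
                grad_w[j] += delta * x[j]
            grad_b += delta
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical data: the single feature is correlated with group membership.
features = [[2.0], [1.5], [1.0], [0.5], [0.0], [-0.5], [-1.0], [-1.5]]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = [0, 0, 0, 0, 1, 1, 1, 1]

plain = train(features, labels, groups, penalty=0.0)
fair = train(features, labels, groups, penalty=5.0)
gap_plain = parity_gap(predict_proba(*plain, features), groups)
gap_fair = parity_gap(predict_proba(*fair, features), groups)
print(f"gap without penalty: {gap_plain:.3f}, with penalty: {gap_fair:.3f}")
```

Raising `penalty` trades predictive accuracy for a smaller gap, so in practice the value would be tuned against both.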

3. Post-processing Techniques

Once a model is trained, post-processing techniques further refine its predictions:

- Threshold Adjustment: Using different decision thresholds for different groups can help equalize outcomes such as selection rates or error rates.

- Reallocation of Predictions: Adjusting the final predictions based on fairness criteria can help ensure parity across groups.
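
Threshold adjustment can be illustrated with a simple scheme that picks, for each group, the score cutoff that selects roughly the same fraction of that group. This is a sketch under assumptions: the helper names, the target rate, and the scores are hypothetical, and equal selection rate is only one of several criteria that could be targeted.

```python
def threshold_for_rate(scores, target_rate):
    """Cutoff that selects about target_rate of the given scores.

    Ties at the cutoff can push the actual rate above the target.
    """
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

def group_thresholds(scores, groups, target_rate):
    """Compute one cutoff per group for the same target selection rate."""
    by_group = {}
    for score, group in zip(scores, groups):
        by_group.setdefault(group, []).append(score)
    return {g: threshold_for_rate(s, target_rate) for g, s in by_group.items()}

def predict(scores, groups, thresholds):
    """Apply each sample's group-specific cutoff."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

# Hypothetical scores: the model scores group "B" systematically lower.
scores = [0.9, 0.8, 0.7, 0.6, 0.6, 0.5, 0.4, 0.3]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

thresholds = group_thresholds(scores, groups, target_rate=0.5)
decisions = predict(scores, groups, thresholds)
print(thresholds, decisions)
```

With a single global cutoff of 0.7, three members of group "A" and none of group "B" would be selected. The per-group cutoffs select two from each.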

AI Algorithms for Bias Detection

Identifying bias in datasets and models is just as vital as mitigating it. Common tools for bias detection include:

- Fairness Metrics: These metrics compare the model's behavior across demographic groups, for example the difference in selection rates (demographic parity) or in true positive rates (equal opportunity), and so quantify any existing bias.

- Data Visualization Techniques: Visual analytics can help uncover hidden trends and patterns in the data that might lead to biased decisions.

- Explainable AI (XAI): XAI methodologies help clarify how decisions are made by AI models, providing insights into whether biases may be influencing outcomes.
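
To make the fairness metrics concrete, the sketch below computes three widely used ones for two groups: the demographic parity difference, the disparate impact ratio, and the equal opportunity difference. The function names and the data are hypothetical, and the code assumes each group has at least one positive label and that the reference group has a nonzero selection rate.

```python
def selection_rate(preds):
    """Share of samples that received a positive prediction."""
    return sum(preds) / len(preds)

def true_positive_rate(labels, preds):
    """Share of truly positive samples that were predicted positive."""
    hits = [p for y, p in zip(labels, preds) if y == 1]
    return sum(hits) / len(hits)

def fairness_report(labels, preds, groups, group_a, group_b):
    """Compare group_a (reference) with group_b on three metrics."""
    def rows(target):
        pairs = [(y, p) for y, p, g in zip(labels, preds, groups) if g == target]
        ys, ps = zip(*pairs)
        return list(ys), list(ps)

    ya, pa = rows(group_a)
    yb, pb = rows(group_b)
    rate_a, rate_b = selection_rate(pa), selection_rate(pb)
    return {
        "demographic_parity_difference": rate_a - rate_b,
        # Assumes rate_a > 0; otherwise the ratio is undefined.
        "disparate_impact_ratio": rate_b / rate_a,
        "equal_opportunity_difference":
            true_positive_rate(ya, pa) - true_positive_rate(yb, pb),
    }

# Hypothetical labels and predictions for two groups of four samples each.
labels = [1, 1, 0, 0, 1, 1, 0, 0]
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

report = fairness_report(labels, preds, groups, "A", "B")
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

Values near 0 for the two differences and near 1 for the ratio indicate similar treatment of the two groups on these particular criteria. The metrics can disagree with one another, so which ones matter depends on the application.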

Real-world Applications of AI in Reducing Bias

The application of AI in reducing bias can be observed across various sectors:

1. Human Resources

In recruitment processes, AI can be used to screen resumes and assess candidates. However, models trained on historical hiring decisions can reproduce the biases in those decisions. By using AI-driven tools that apply fairness metrics and pre-processing techniques, organizations can build a more equitable hiring process that evaluates candidates on job-relevant criteria.

2. Law Enforcement

AI is increasingly being applied in predictive policing. However, if not managed correctly, these systems could disproportionately target specific communities. Applying bias detection methods and fairness constraints can reduce that risk and help build community trust.

3. Healthcare

In healthcare, biased data can lead to disparities in treatment recommendations. AI systems that account for demographic diversity in their datasets can improve health outcomes by providing equitable treatment options tailored to the needs of all patients.

Challenges in Implementing AI for Bias Reduction

While AI holds great promise for reducing bias in big data models, several challenges remain:

1. Data Quality

The effectiveness of AI in combatting bias hinges significantly on the quality of the data. Inaccurate, incomplete, or outdated data can lead to misguided insights and reinforce existing biases.

2. Complexity of Bias

Bias is multifaceted and context-dependent, making it challenging to craft one-size-fits-all solutions. What may work in one scenario might not be applicable in another.

3. Ethical Considerations

Implementing AI solutions raises ethical questions related to transparency, accountability, and the potential for new forms of bias. Organizations must navigate these complexities carefully to avoid unintended consequences.

The Future of AI in Bias Mitigation

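Looking ahead, AI technologies have the potential to play a pivotal role in advancing bias reduction strategies. Promising directions include:

- Federated Learning: This technique allows models to learn from decentralized data without moving it to a central location, which preserves privacy. Because it makes it possible to train on data sources that could not otherwise be pooled, it may lead to more representative training data and less biased outcomes.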

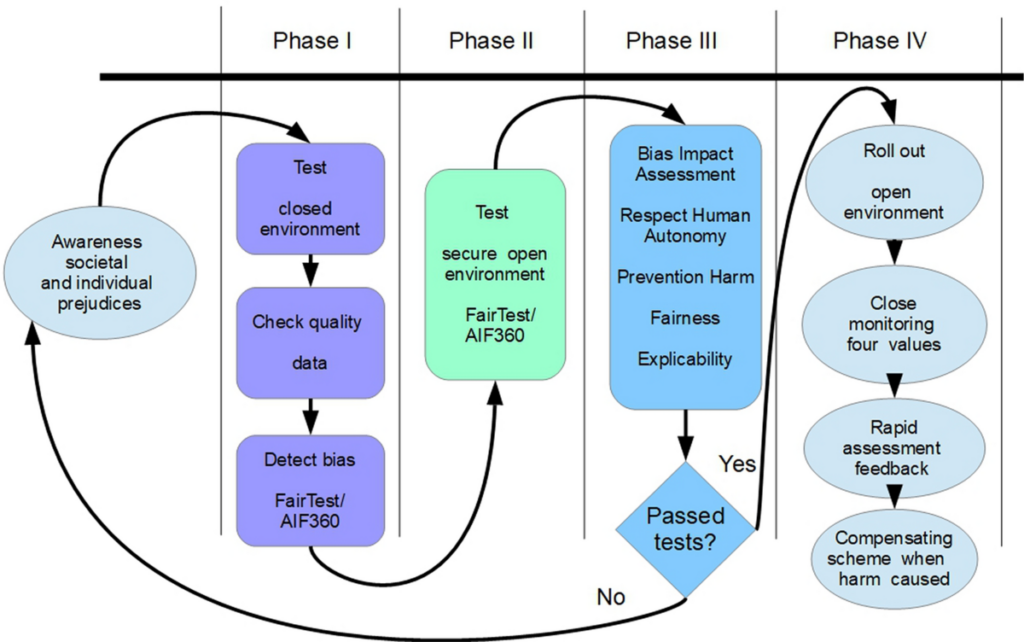

- Continuous Monitoring Systems: Real-time monitoring of AI models can detect biases as they emerge and allow for prompt corrective actions.

- Collaborative AI: Encouraging collaboration between data scientists, ethicists, and domain experts will enhance the understanding of biases and refine mitigation strategies.
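
The continuous monitoring idea above can be sketched as a sliding-window check on recent predictions. This is a minimal illustration under assumptions: the class name `ParityMonitor`, the window size, and the tolerance are hypothetical, and a production system would also track error rates and log its alerts.

```python
from collections import deque

class ParityMonitor:
    """Track recent positive-prediction rates per group and flag large gaps."""

    def __init__(self, window=100, tolerance=0.2):
        self.window = window
        self.tolerance = tolerance
        self.history = {}

    def record(self, group, prediction):
        """Store a 0/1 prediction, keeping only the latest `window` per group."""
        buffer = self.history.setdefault(group, deque(maxlen=self.window))
        buffer.append(prediction)

    def gap(self):
        """Largest difference in selection rate between any two groups."""
        rates = [sum(h) / len(h) for h in self.history.values() if h]
        return max(rates) - min(rates) if len(rates) >= 2 else 0.0

    def alert(self):
        return self.gap() > self.tolerance

# Hypothetical stream of predictions with a small window for illustration.
monitor = ParityMonitor(window=4, tolerance=0.2)
for prediction in [1, 1, 1, 1]:
    monitor.record("A", prediction)
for prediction in [1, 0, 0, 0]:
    monitor.record("B", prediction)
first_alert = monitor.alert()

# Later predictions for group "B" push the older ones out of the window.
for prediction in [1, 1, 1, 1]:
    monitor.record("B", prediction)
second_alert = monitor.alert()
print(first_alert, second_alert)
```

The window size controls the trade-off between reacting quickly to drift and raising alerts on random fluctuations in small samples.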

As organizations increasingly rely on AI to make critical decisions based on big data, the need for unbiased models will grow. Adopting bias detection and mitigation strategies today will pave the way for a more equitable future across all sectors.

In summary, AI can play a significant role in reducing bias in large-scale big data models. By combining pre-processing, in-processing, and post-processing techniques with bias detection, transparency, and attention to ethical considerations, organizations can build more inclusive and just systems and harness the potential of big data while minimizing the risks associated with bias.