Apache Arrow is a widely used technology for high-performance data processing in Big Data applications. It defines a standardized in-memory format for data interchange, which reduces the overhead of moving data between computing platforms and speeds up analytics on large datasets.

Apache Arrow is a cross-language development platform for in-memory data. Because it handles large datasets efficiently, it has become a common component of architectures that need speed and scalability. In this article, we explore how Apache Arrow improves data processing performance and where it fits in the big data ecosystem.

Understanding Apache Arrow

Apache Arrow provides a columnar memory format optimized for analytical workloads. Unlike traditional row-based data structures, Arrow’s columnar format stores the values of each column contiguously, which improves CPU cache utilization and enables vectorized processing. The key characteristics of Arrow include:

- Cross-Language Compatibility: Apache Arrow supports numerous programming languages, including Python, R, Java, and C++, making it accessible for a wide range of data professionals.

- Zero-Copy Reads: Because Arrow data has the same layout in memory and when exchanged between processes, applications that share a buffer (for example, through shared memory or a memory-mapped file) can read it directly without a deserialization step. This can substantially reduce latency.

- Efficient Data Serialization: Arrow’s IPC format writes record batches in essentially the same layout they have in memory, so sharing data between applications involves little conversion work, a critical factor in big data environments.

Performance Benefits of Apache Arrow in Big Data

The integration of Apache Arrow brings several performance benefits, particularly in the context of big data:

1. Improved Data Processing Speed

With Arrow’s in-memory columnar format, analytical queries, especially those involving complex computations, can often run significantly faster. Vectorized processing operates on entire columns at once rather than on individual rows, so a query touches only the columns it needs and reads them from contiguous memory.
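The difference between row-wise and column-wise access can be sketched with Python's standard library alone. This is a conceptual illustration of the layout idea, not Arrow's actual API; the field names are hypothetical.

```python
from array import array

# Row-oriented: each record is a separate object, so the values of one
# field are scattered across many objects in memory.
rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": 35.5},
    {"order_id": 3, "amount": 12.25},
]
row_total = sum(r["amount"] for r in rows)

# Column-oriented: all values of one field sit in one contiguous typed buffer.
columns = {
    "order_id": array("q", [1, 2, 3]),          # 64-bit signed integers
    "amount": array("d", [20.0, 35.5, 12.25]),  # 64-bit floats
}
# An aggregation reads a single buffer and never touches "order_id".
col_total = sum(columns["amount"])

# A memoryview exposes part of the buffer without copying the values.
view = memoryview(columns["amount"])[1:]

print(row_total, col_total, view.tolist())
```

Both totals are the same; the point is that the columnar version scans one dense buffer, which is the access pattern that vectorized engines exploit.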

2. Enhanced Interoperability

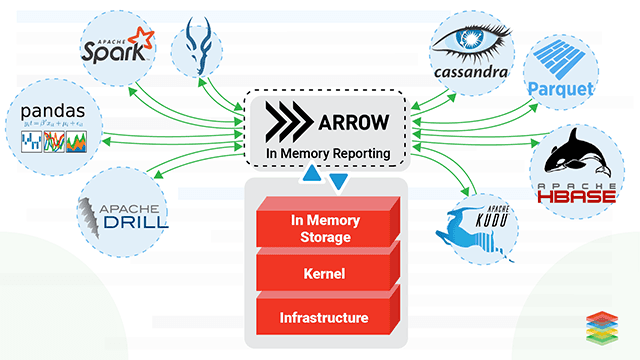

Big data systems often comprise various tools and technologies. Apache Arrow acts as a common in-memory layer that enables data interchange between processing frameworks such as Apache Spark and pandas, and it converts efficiently to and from columnar storage formats such as Apache Parquet. This interoperability reduces the need for repeated data transformation, thus minimizing performance bottlenecks.

3. Streamlined Workflows

In environments where data flows through multiple processing engines, converting data between each engine’s internal format can be a significant overhead. When the engines share Arrow’s format, much of this conversion disappears, which streamlines ETL (Extract, Transform, Load) workflows and can improve overall system throughput for both data lake and streaming pipelines.

How Apache Arrow Integrates with Popular Big Data Frameworks

Apache Spark

Apache Spark is one of the most popular big data processing frameworks. The integration of Arrow with Spark allows for efficient data exchange between Spark’s JVM and Python processes in PySpark. This integration speeds up Spark jobs through two key features:

- Arrow-Based DataFrame Conversion: Arrow significantly speeds up converting Spark DataFrames to pandas DataFrames and vice versa (in Spark 3.x, this is enabled with the spark.sql.execution.arrow.pyspark.enabled setting).

- Serialization Efficiency: With Arrow, data moves between the JVM and Python workers in columnar batches rather than being serialized row by row, which reduces the time Spark jobs spend transferring data.

Apache Drill

Apache Drill is a schema-free SQL query engine for big data exploration that can query data from various sources such as HDFS, HBase, and cloud storage. Drill and Arrow are closely related: Drill’s in-memory columnar representation (ValueVectors) served as the starting point for Arrow’s Java implementation, although Drill’s execution engine is built on its own vectors rather than on the Arrow libraries. The shared columnar design allows Drill’s users to:

- Achieve Interactive Query Performance: Columnar in-memory data speeds up data access and query response times, providing a more interactive experience for users exploring large datasets.

- Facilitate Aggregations and Joins: The columnar format supports faster aggregations and joins, critical for analytics over large datasets.

Use Cases of Apache Arrow in Big Data Environments

1. Real-Time Analytics

Apache Arrow excels in scenarios requiring real-time analytics. The efficiency of data retrieval combined with low-latency processing allows data scientists to derive insights quickly, essential for applications like fraud detection and monitoring.

2. Machine Learning

Big data environments are increasingly used for machine learning applications. Apache Arrow provides infrastructure for handling large datasets efficiently, which can shorten the data loading and preprocessing stages of training and inference. Libraries such as TensorFlow and Spark MLlib can benefit from the rapid data access and manipulation capabilities of Arrow.

3. Streaming Data Processing

In streaming applications, Arrow can serve as the in-memory and wire format for data moving through pipelines built on systems like Apache Kafka and Apache Flink. Because Arrow data is organized into record batches, streaming jobs can process data batch by batch as it arrives, which can reduce latency and improve throughput.

Key Features of Apache Arrow

Apache Arrow offers a variety of features that enhance its contribution to high-performance data processing:

- Memory Efficiency: Arrow’s columnar memory format stores values in compact typed buffers, avoiding per-value object overhead and improving cache locality, which is critical when dealing with large datasets.

- Support for Complex Data Types: Arrow natively supports complex data structures, including nested and list types, facilitating the processing of diverse data formats commonly found in big data.

- Streaming Capability: Arrow’s design accommodates both batch and stream processing models, providing versatility across various data handling scenarios.
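For nested data, Arrow's format specification describes a variable-size list column as an offsets buffer plus a flattened child array. The standard-library sketch below mimics that layout conceptually; it is not Arrow's API, and null tracking (the validity bitmap) is omitted for brevity.

```python
from array import array

# Logical column of lists: [[1, 2], [], [3, 4, 5]]
values = array("q", [1, 2, 3, 4, 5])  # all child values, flattened
offsets = array("i", [0, 2, 2, 5])    # row i spans offsets[i]..offsets[i + 1]


def list_at(i):
    """Return row i by slicing the flattened values with its two offsets."""
    return values[offsets[i]:offsets[i + 1]].tolist()


# There is one more offset than there are rows.
decoded = [list_at(i) for i in range(len(offsets) - 1)]
print(decoded)
```

Storing nested data this way keeps the child values contiguous, so even list columns can be scanned as dense buffers.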

Challenges and Considerations

While Apache Arrow presents numerous advantages for big data processing, it also faces certain challenges:

1. Adoption and Learning Curve

Although Arrow supports various programming languages, its adoption may require teams to familiarize themselves with its architecture and API. Training and resources to ease this learning curve are essential for effective implementation.

2. Compatibility with Existing Tools

Because Apache Arrow aims to connect many data tools, ensuring compatibility and stability across the versions of Arrow that each tool depends on can be a challenge. Organizations need to keep library versions aligned and follow current best practices to leverage Arrow effectively.

3. Limited Use Cases in Traditional OLTP Systems

While Arrow thrives in analytical and big data scenarios, its use in traditional Online Transaction Processing (OLTP) systems remains limited. Organizations must consider their data architecture and processing needs before adopting Apache Arrow as a central technology.

Conclusion

Apache Arrow is changing how high-performance data processing is done in big data environments. By leveraging its cross-language capabilities, in-memory columnar format, and efficient serialization, organizations can achieve faster data processing, better resource utilization, and improved interoperability among big data tools. As data continues to grow in volume and complexity, technologies like Apache Arrow will be vital in ensuring that businesses can process data and derive meaningful insights in real time.

In short, a standardized in-memory data format lets Big Data tools and platforms exchange data with minimal copying and conversion. That reduction in memory use and data movement overhead is what makes Apache Arrow a valuable asset in modern data processing workflows.