Data preprocessing plays a crucial role in Big Data and artificial intelligence (AI) because it lays the foundation for accurate and efficient analysis. As the volume, velocity, and variety of data grow, cleaning and preparing data before analysis becomes more important, not less. In a Big Data context, preprocessing covers tasks such as data cleaning, normalization, transformation, and feature selection, all of which improve the quality and reliability of the data. Organizations that prepare their data well are better placed to derive valuable insights, make informed decisions, and turn Big Data into business growth and innovation.

Data preprocessing is a critical step in the big data lifecycle, especially for artificial intelligence (AI) applications. It transforms raw data into a clean dataset suitable for analysis and modeling, and the quality of that dataset directly affects both the insights produced and the performance of the algorithms trained on it.

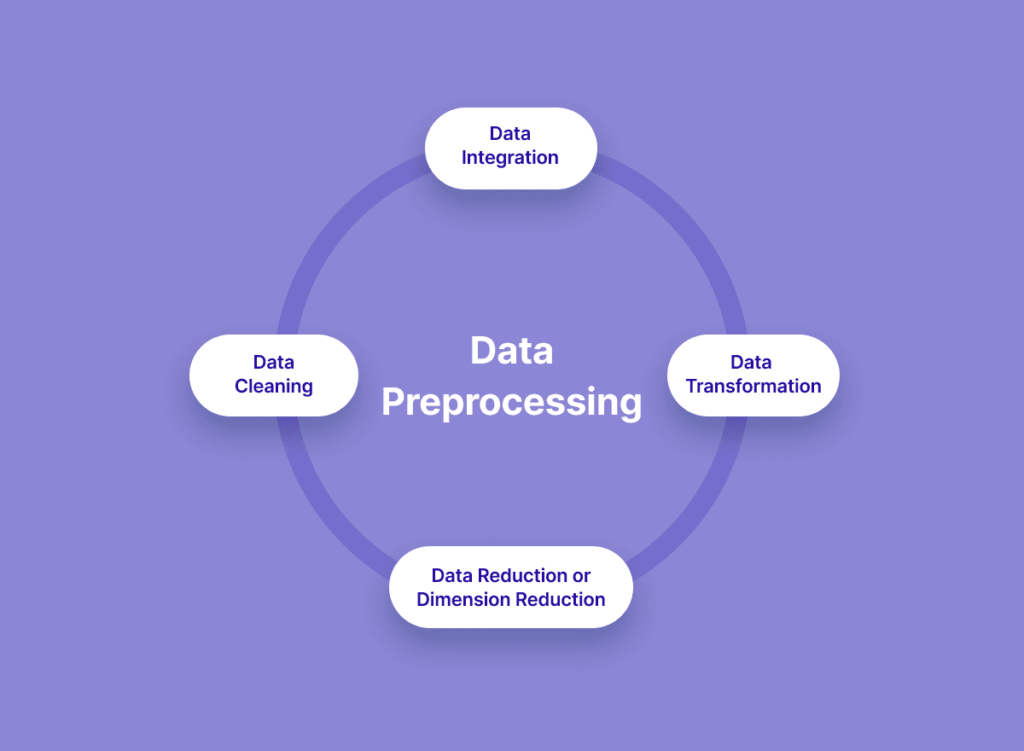

Understanding Data Preprocessing

Data preprocessing refers to the techniques used to prepare raw data for analysis. This preparation typically includes the following steps:

- Data cleaning

- Data integration

- Data transformation

- Data reduction

This multi-step process aims to ensure that data is accurate, complete, and suitable for the analytical tasks ahead.

The Importance of Data Cleaning

In big data environments, ensuring the cleanliness of data is paramount. Data cleaning involves identifying and correcting errors or inconsistencies in data. Common tasks in this phase include:

- Removing duplicates

- Handling missing values

- Correcting inconsistencies (e.g., different formats for dates)

- Identifying outliers and removing data points that are clearly erroneous

Incorporating effective data cleaning strategies not only improves the quality of the dataset but also enhances the reliability of the AI models that are trained using it.
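The cleaning tasks listed above can be sketched in a few lines of plain Python. The records, the two accepted date formats, and the rule that negative amounts are invalid are hypothetical assumptions chosen for illustration. The sketch uses only the standard library so it runs without dependencies; it is not a production pipeline.

```python
from datetime import datetime
from statistics import median

# Hypothetical raw records: one exact duplicate, one missing amount,
# one date in a different format, and one invalid (negative) amount.
RAW = [
    {"id": 1, "date": "2024-01-05", "amount": 120.0},
    {"id": 1, "date": "2024-01-05", "amount": 120.0},
    {"id": 2, "date": "05/01/2024", "amount": None},
    {"id": 3, "date": "2024-01-07", "amount": 95.5},
    {"id": 4, "date": "2024-01-08", "amount": -1.0},
]

# Assumed input formats: ISO dates and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return the date as an ISO string, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable dates are treated as missing


def clean(records):
    # 1. Remove exact duplicates, keeping the first occurrence.
    seen, unique = set(), []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(record))  # copy so the input is not mutated
    # 2. Correct inconsistent date formats.
    for record in unique:
        record["date"] = parse_date(record["date"])
    # 3. Drop erroneous values (assumption: negative amounts are invalid).
    valid = [r for r in unique if r["amount"] is None or r["amount"] >= 0]
    # 4. Impute missing amounts with the median of the observed amounts.
    fill = median(r["amount"] for r in valid if r["amount"] is not None)
    for record in valid:
        if record["amount"] is None:
            record["amount"] = fill
    return valid
```

Here `clean(RAW)` returns three records: the duplicate is gone, record 2's date becomes `2024-01-05`, and its missing amount is filled with 107.75, the median of 120.0 and 95.5. Invalid rows are dropped before the median is computed so they cannot skew the imputed value. In pandas, the corresponding operations are `drop_duplicates()`, `pd.to_datetime()`, and `fillna()`.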

Advantages of Data Integration

Data integration involves combining data from multiple sources to form a coherent dataset. In the context of big data, this can present unique challenges due to the variety of data formats and structures. The advantages of data integration include:

- A comprehensive view of the data landscape from multiple sources

- Improved decision-making based on enriched datasets

- Enabling advanced analytical capabilities by feeding algorithms with diverse data

The unification of datasets from various domains is crucial for developing robust AI solutions that accurately reflect real-world scenarios.
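A minimal sketch of integrating two sources, assuming a hypothetical CRM system and billing system that describe the same customers but use different field names and ID casing. The key is harmonized first, then billing data is left-joined onto the CRM records, and billing keys with no match are reported rather than silently lost.

```python
# Two hypothetical sources describing the same customers with different conventions.
CRM = [
    {"customer_id": "C001", "name": "Ada", "country": "UK"},
    {"customer_id": "C002", "name": "Lin", "country": "SG"},
]
BILLING = [
    {"cust": "c001", "total_spend": 250.0},
    {"cust": "c003", "total_spend": 80.0},  # no matching CRM record
]


def integrate(crm, billing):
    """Left-join billing totals onto CRM records after harmonizing the key.

    Returns the merged records and the billing keys that matched no CRM record.
    """
    # Assumption: the two systems' IDs differ only in letter case.
    spend_by_id = {row["cust"].upper(): row["total_spend"] for row in billing}
    merged = []
    for row in crm:
        record = dict(row)
        # Customers with no billing data keep a None placeholder instead of being dropped.
        record["total_spend"] = spend_by_id.get(row["customer_id"])
        merged.append(record)
    crm_ids = {row["customer_id"] for row in crm}
    unmatched = sorted(set(spend_by_id) - crm_ids)
    return merged, unmatched
```

Reporting unmatched keys makes integration gaps visible instead of hiding them. In pandas, `DataFrame.merge` with `how="left"` performs the same join, and `indicator=True` flags which rows found a match.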

Data Transformation Techniques

Data transformation refers to modifying the data format or structure to make it suitable for analysis. This involves:

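- Normalization: Scaling numeric features to a common range (for example, 0 to 1) so that features with large magnitudes do not dominate distance- or gradient-based algorithms

- Aggregation: Summarizing detailed records (for example, daily totals instead of individual transactions), which can substantially lower processing time on big datasets

- Feature extraction: Deriving new, more informative features from raw attributes to improve the effectiveness of machine learning models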

Data transformation is vital for ensuring that AI models can efficiently process and analyze data without being overwhelmed by irrelevant information.
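The three transformations listed above can each be sketched as a small function. This is an illustration under simple assumptions: min-max scaling stands in for normalization, transactions are hypothetical `(date, amount)` pairs, and a weekend flag is used as an example of a derived feature.

```python
from collections import defaultdict
from datetime import date


def min_max(values):
    """Normalization: rescale numbers linearly to [0, 1] (constant columns map to 0.0)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def daily_totals(transactions):
    """Aggregation: collapse (day, amount) transactions into one total per day."""
    totals = defaultdict(float)
    for day, amount in transactions:
        totals[day] += amount
    return dict(sorted(totals.items()))


def is_weekend(day):
    """Feature extraction: derive a new 0/1 feature from a raw date."""
    return 1 if day.weekday() >= 5 else 0


if __name__ == "__main__":
    print(min_max([10, 20, 30]))  # [0.0, 0.5, 1.0]
    print(daily_totals([(date(2024, 1, 5), 10.0),
                        (date(2024, 1, 5), 5.0),
                        (date(2024, 1, 6), 2.5)]))
    print(is_weekend(date(2024, 1, 6)))  # 1 (a Saturday)
```

Min-max scaling is only one normalization choice; z-score standardization is a common alternative when a feature contains extreme values that would compress everything else into a narrow band.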

Data Reduction Techniques

With the exponential growth of data in big data systems, data reduction techniques have become increasingly essential. These techniques help in dealing with large volumes of data by:

- Reducing dimensionality: Techniques such as Principal Component Analysis (PCA) reduce the number of features while retaining most of the variance in the data

- Sampling methods: Selecting a representative subset of data for analysis

- Data compression: Reducing the size of the dataset while maintaining its integrity

Using effective data reduction methods enhances computational efficiency and reduces storage requirements, making it easier to scale AI applications.
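To make PCA and sampling concrete, the sketch below implements both in plain Python: PCA is approximated with power iteration on the sample covariance matrix to find the first principal component, and sampling uses a seeded simple random sample. It is a teaching sketch that assumes small, dense numeric data; in practice a library routine such as scikit-learn's `PCA` would be used instead.

```python
import random


def mean_center(rows):
    """Subtract each column's mean so the data is centered at the origin."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in rows]


def covariance(centered):
    """Sample covariance matrix of already-centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def top_component(cov, iters=200):
    """Power iteration: approximate the unit eigenvector with the largest eigenvalue."""
    d = len(cov)
    v = [1.0] * d  # assumption: the start vector is not orthogonal to the answer
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v


def explained_variance_ratio(cov, v):
    """Share of total variance captured along unit vector v (Rayleigh quotient / trace)."""
    d = len(cov)
    captured = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return captured / sum(cov[i][i] for i in range(d))


def reduce_to_1d(rows):
    """Project each row onto the first principal component."""
    centered = mean_center(rows)
    cov = covariance(centered)
    pc = top_component(cov)
    scores = [sum(a * b for a, b in zip(row, pc)) for row in centered]
    return scores, pc, explained_variance_ratio(cov, pc)


def sample_rows(rows, k, seed=0):
    """Simple random sample of k rows without replacement (seeded for repeatability)."""
    return random.Random(seed).sample(rows, k)
```

For strongly correlated two-column data such as `[[1, 2], [2, 4.1], [3, 5.9], [4, 8.2], [5, 9.9]]`, `reduce_to_1d` replaces two features with one while keeping more than 99% of the variance, which is the "without losing significant information" trade-off in practice.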

Impact of Data Preprocessing on AI Models

The success of AI models depends heavily on the quality of the data they are trained on. Key impacts of data preprocessing include:

Model Accuracy

Clean and well-prepared datasets lead to improved model accuracy. Data preprocessing ensures that AI algorithms learn from high-quality data, minimizing biases and errors. For instance, if a model is trained with incomplete data due to missing values, it may make inaccurate predictions.

Training Time

Efficient data preprocessing can significantly reduce the training time of machine learning models. A well-prepared dataset, free of extraneous noise and redundant information and with features on comparable scales, allows gradient-based algorithms to converge faster.
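The effect of preprocessing on convergence can be illustrated with a toy experiment: fit a one-feature linear model by gradient descent, once on a raw feature in the hundreds and once on the same feature min-max scaled to [0, 1]. The data, tolerance, and iteration cap below are hypothetical choices for illustration, and each run uses a step size that is guaranteed stable for its own data.

```python
def fit_gd(xs, ys, tol=1e-6, max_iters=5000):
    """Fit y = w*x + b by batch gradient descent on mean squared error.

    Returns (w, b, updates_performed, converged). The step size is the reciprocal
    of the loss Hessian's trace, 2 * (mean(x^2) + 1), which upper-bounds the
    largest eigenvalue and therefore keeps every update stable.
    """
    n = len(xs)
    lr = 1.0 / (2.0 * (sum(x * x for x in xs) / n + 1.0))
    w = b = 0.0
    for step in range(max_iters):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        if (grad_w ** 2 + grad_b ** 2) ** 0.5 < tol:
            return w, b, step, True
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, max_iters, False


# Hypothetical data: one feature on a large scale and an exact linear target.
xs_raw = [100.0, 200.0, 300.0, 400.0, 500.0]
ys = [3.0 * x + 2.0 for x in xs_raw]

# Min-max scale the feature to [0, 1]; the target is left unchanged.
lo, hi = min(xs_raw), max(xs_raw)
xs_scaled = [(x - lo) / (hi - lo) for x in xs_raw]

if __name__ == "__main__":
    print("raw:   ", fit_gd(xs_raw, ys)[2:])
    print("scaled:", fit_gd(xs_scaled, ys)[2:])
```

On the raw feature the run hits the 5,000-update cap without converging, because the loss surface is badly conditioned; on the scaled feature it converges well under the cap. The scaled model's weight of 1200 corresponds to 3 per raw unit, since the scaled feature is `(x - 100) / 400`.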

Generalizability

Techniques such as feature extraction and normalization can also improve the generalizability of AI models: with fewer irrelevant features and consistently scaled inputs, models are less prone to overfitting and more likely to perform well on unseen data.

Challenges in Data Preprocessing

Despite its importance, data preprocessing is not without challenges:

Volume and Variety of Data

The sheer volume and variety of data in the big data landscape can complicate preprocessing. Developing scalable processes and automated workflows becomes crucial in handling large datasets efficiently.

Dynamic Nature of Data

In many instances, especially in real-time applications, data is continuously updated. Ensuring that preprocessing is adaptable to dynamic datasets can be challenging.
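One way to keep preprocessing adaptable to continuously updated data is to maintain statistics incrementally instead of recomputing them over the full history. The sketch below assumes z-score standardization is the transformation being applied and uses Welford's online algorithm for the running mean and variance; the class name is hypothetical.

```python
import math


class RunningStandardizer:
    """Tracks mean and variance incrementally (Welford's algorithm) so new values
    can be standardized without reprocessing historical data."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Fold one new observation into the running statistics in O(1)."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        """Sample standard deviation; reported as 0.0 until two values are seen."""
        if self.count < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.count - 1))

    def transform(self, x):
        """Return the z-score of x under the statistics seen so far."""
        s = self.std
        return 0.0 if s == 0.0 else (x - self.mean) / s
```

Each `update` call costs constant time and memory, so the same object can sit inside a streaming consumer and standardize events as they arrive. Welford's update is also more numerically stable than accumulating a raw sum of squares.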

Skills and Expertise

Data preprocessing requires a blend of technical and domain-specific skills. Organizations may struggle to find qualified personnel who can effectively navigate the complexities of big data. Continuous training and knowledge sharing can mitigate this challenge.

Tools and Technologies for Data Preprocessing

- Apache Spark: An open-source distributed engine for large-scale data processing and analytics.

- Pandas: A data manipulation and analysis library for Python, ideal for cleaning and transforming data.

- Talend: A data integration platform that streamlines the preprocessing pipeline.

- RapidMiner: A platform that provides advanced analytics, including data mining and machine learning.

Each of these tools has unique capabilities that cater to specific aspects of the data preprocessing phase in big data analytics.

Future Trends in Data Preprocessing

As the landscape of big data and AI continues to evolve, several trends are likely to shape the future of data preprocessing:

Automation and AI-driven Tools

The integration of artificial intelligence in data preprocessing tools is likely to lead to automation of repetitive tasks. This will allow data scientists to focus on more strategic activities.

Real-Time Data Processing

With the increasing demand for real-time analytics, preprocessing techniques will need to be adapted to process streaming data efficiently.

Greater Emphasis on Data Governance

As data privacy regulations become stricter, organizations will need to implement robust data governance frameworks. This includes ensuring that preprocessing methods comply with legal standards while maintaining data quality.
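As one illustration of governance-aware preprocessing, direct identifiers can be pseudonymized before data leaves a controlled environment. The sketch below uses Python's standard `hmac` and `hashlib` modules and assumes hypothetical field names and a placeholder key. Pseudonymized data can still count as personal data under regulations such as the GDPR, so this is one technique within a governance framework, not a guarantee of compliance.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would come from a secrets manager, never source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same input always yields the same token, so records can still be joined,
    but the original value is not recoverable from the token alone.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, id_fields=("email",), drop_fields=("name",)):
    """Pseudonymize join keys and drop fields that downstream analysis does not need."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(cleaned[field])
    return cleaned
```

A keyed HMAC is used rather than a plain hash because common identifiers such as email addresses are easy to guess, and an unkeyed hash of a guessable value can be reversed by hashing candidate inputs.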

Conclusion

In the expanding fields of big data and artificial intelligence, data preprocessing plays a pivotal role in determining the success of analytical work. By ensuring that data is clean, consistently formatted, and ready for analysis, it improves the accuracy of AI models and Big Data analytics and leads to better-informed decisions. Treating preprocessing as a core part of every project, rather than an afterthought, can significantly improve the outcomes of Big Data initiatives.