Processing vast amounts of text is a significant challenge in Big Data, one that calls for techniques able to extract meaningful insights at scale. Hierarchical Transformers have emerged as a powerful tool in natural language processing for handling large-scale text data. By incorporating hierarchical structure into the traditional Transformer architecture, these models are designed to process and analyze long documents and large corpora more efficiently. This article examines the role Hierarchical Transformers play in meeting the demands of large-scale text processing within Big Data analytics.

Understanding Hierarchical Transformers

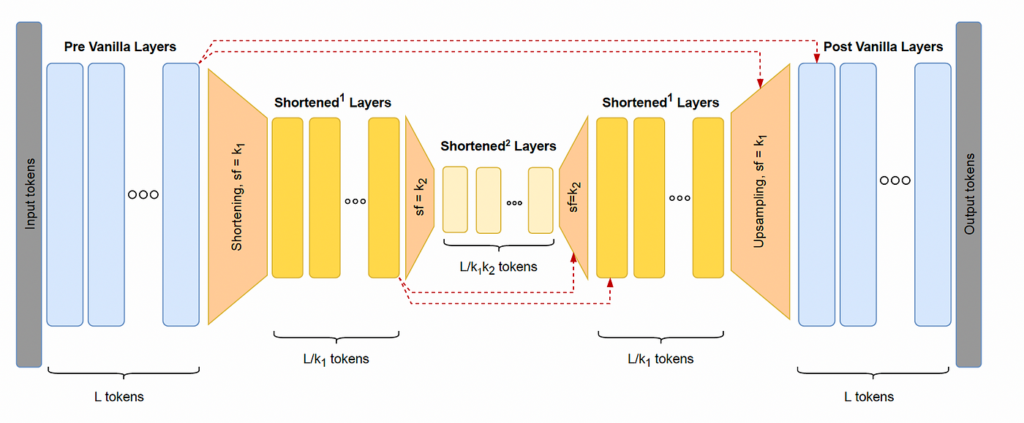

Hierarchical Transformers are an evolution of the traditional Transformer architecture, designed to handle large-scale text processing tasks more efficiently. These models process information hierarchically, which lets them capture linguistic structure at multiple levels: words, sentences, and entire documents. This matters in Big Data settings, where managing and analyzing vast quantities of text is essential.
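To make the word, sentence, and document levels concrete, here is a minimal sketch of turning raw text into the nested structure a hierarchical model would consume. The function name `to_hierarchy` and the regex-based splitting are illustrative assumptions; a production pipeline would more likely use a trained tokenizer and sentence segmenter.

```python
import re

def to_hierarchy(document: str) -> list[list[str]]:
    """Split a document into sentences, and each sentence into lowercase words."""
    # Naive sentence split: break on whitespace that follows ., ! or ?
    # (an assumption for illustration; abbreviations would confuse it).
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", document.strip()) if s]
    # Naive word split: runs of letters, digits, or apostrophes.
    return [re.findall(r"[A-Za-z0-9']+", s.lower()) for s in sentences]

doc = "Transformers read tokens. Hierarchies group them! Does that help?"
hierarchy = to_hierarchy(doc)
print(hierarchy)
# [['transformers', 'read', 'tokens'], ['hierarchies', 'group', 'them'], ['does', 'that', 'help']]
```

The outer list is the document, each inner list is a sentence, and each string is a word, which mirrors the three levels described above.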

Architecture of Hierarchical Transformers

The architecture of Hierarchical Transformers typically consists of two primary components: the word-level encoder and the sentence-level encoder. The word-level encoder embeds the individual words of a sentence into dense vectors and captures the relationships between them, producing a single representation for each sentence. The sentence-level encoder then models the relationships between those sentence representations, constructing a coherent representation of the whole document.
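The two-level idea can be sketched in a few lines of plain Python: words within a sentence are encoded and pooled into one vector per sentence, and a second pass relates those sentence vectors to each other. This is a toy under stated assumptions, not a real model: `embed` is a deterministic stand-in for a learned embedding table, the attention uses no learned projections (queries, keys, and values are all the input), and mean pooling is just one possible aggregation choice.

```python
import math
import random

DIM = 8  # toy embedding size (assumption)

def embed(word: str) -> list[float]:
    """Deterministic toy embedding; stands in for a learned embedding table."""
    rng = random.Random(word)  # seeding with the word makes the vector repeatable
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]

def self_attention(vectors):
    """Single-head scaled dot-product attention with Q = K = V = input."""
    scale = math.sqrt(len(vectors[0]))
    output = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        output.append([
            sum(w / total * value[d] for w, value in zip(weights, vectors))
            for d in range(len(query))
        ])
    return output

def mean_pool(vectors):
    """Average a list of vectors into a single vector."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def encode_document(sentences):
    """Word level first (one vector per sentence), then sentence level."""
    sentence_vectors = [
        mean_pool(self_attention([embed(w) for w in words])) for words in sentences
    ]
    document_vector = mean_pool(self_attention(sentence_vectors))
    return sentence_vectors, document_vector

# Assumes every sentence contains at least one word.
sentences = [["models", "read", "words"], ["sentences", "form", "documents"]]
sentence_vectors, document_vector = encode_document(sentences)
print(len(sentence_vectors), len(document_vector))  # 2 8
```

Note that attention is only ever computed within one sentence or across the sentence vectors, never across every word of the document at once.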

One of the critical innovations in hierarchical designs is their ability to aggregate information across different levels of structure. A standard Transformer attends over the entire sequence at once, which becomes expensive for long inputs because self-attention cost grows quadratically with sequence length; hierarchical models instead attend within smaller units and then across them. This makes them well suited to documents rich in structure, like scientific papers or legal texts.

Advantages of Using Hierarchical Transformers

Hierarchical Transformers offer several significant advantages over traditional transformer models:

- Improved Scalability: Hierarchical Transformers can scale better to long documents and large datasets, as they operate on smaller, more manageable units such as sentences or paragraphs rather than on an entire document at once.

- Enhanced Contextual Understanding: By considering text at multiple hierarchical levels, they can capture nuances in meaning that might otherwise be missed, leading to improved performance in tasks such as text classification and sentiment analysis.

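- Resource Efficiency: Because self-attention cost grows roughly quadratically with sequence length, attending within short segments and then across segment summaries can reduce computational overhead during training and inference compared with full attention over a long document, which is vital when dealing with large-scale text data.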
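The scalability and efficiency points can be illustrated with simple arithmetic. The sketch below counts pairwise attention scores for a flat model versus a two-level one. It assumes fixed-size segments and one summary vector per segment, and it ignores constant factors such as layers, heads, and hidden size, so treat the numbers as a rough comparison rather than a benchmark.

```python
def full_attention_pairs(num_tokens: int) -> int:
    """Every token attends to every token: n * n scores."""
    return num_tokens * num_tokens

def hierarchical_attention_pairs(num_tokens: int, segment_len: int) -> int:
    """Attention within each segment, plus attention across segment summaries."""
    full_segments, remainder = divmod(num_tokens, segment_len)
    segment_lengths = [segment_len] * full_segments + ([remainder] if remainder else [])
    within = sum(n * n for n in segment_lengths)   # word-level passes
    across = len(segment_lengths) ** 2             # sentence-level pass
    return within + across

tokens, segment = 4096, 64
flat = full_attention_pairs(tokens)
hier = hierarchical_attention_pairs(tokens, segment)
print(flat, hier, round(flat / hier, 1))  # 16777216 266240 63.0
```

For a 4,096-token document split into 64-token segments, the two-level scheme computes about 63 times fewer attention scores under these assumptions.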

Applications in Large-Scale Text Processing

Hierarchical Transformers have a broad range of applications in large-scale text processing. Here are a few compelling examples:

1. Text Summarization

In situations where data volume is enormous, the ability to condense information is paramount. Hierarchical Transformers can generate concise summaries of large documents, making the main points easily accessible. This capability is especially important in industries like finance and healthcare, where professionals frequently analyze extensive reports.

2. Document Classification

Classifying documents into predefined categories is a critical task in text analytics. Using Hierarchical Transformers, companies can categorize massive amounts of unstructured textual data into relevant classes. For instance, legal firms can automatically classify contracts and legal documents, improving their workflow efficiency.

3. Information Retrieval

Efficiently retrieving information from large datasets can be challenging. Hierarchical Transformers enhance search algorithms by allowing them to understand the semantic relationships within text data. This is vital for applications in search engines and digital libraries where users seek specific information across vast document collections.
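As a rough illustration of semantic retrieval, the sketch below ranks documents by cosine similarity between a query vector and per-document vectors. It assumes such vectors have already been produced by some encoder; the three-dimensional values, the document ids, and the `rank` helper are made up for the example.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank(query_vector, document_vectors, top_k=2):
    """Return the ids of the top_k documents most similar to the query."""
    scored = sorted(
        ((cosine(query_vector, vec), doc_id) for doc_id, vec in document_vectors.items()),
        reverse=True,
    )
    return [doc_id for _, doc_id in scored[:top_k]]

# Hypothetical document vectors (illustrative values, not model output).
document_vectors = {
    "contract": [0.9, 0.1, 0.0],
    "paper": [0.1, 0.9, 0.2],
    "memo": [0.5, 0.5, 0.0],
}
query = [1.0, 0.2, 0.0]
top = rank(query, document_vectors)
print(top)  # ['contract', 'memo']
```

In practice an approximate nearest-neighbor index would usually replace the full sort once the collection grows large.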

4. Sentiment Analysis

In a world where consumer feedback is abundant, understanding sentiment is crucial for businesses. Hierarchical Transformers can analyze reviews and feedback at different levels, providing companies with insights into customer emotions and opinions about products or services. This helps in shaping marketing strategies and enhancing customer satisfaction.

Challenges and Limitations

Despite their many advantages, Hierarchical Transformers are not without challenges:

- Model Complexity: The hierarchical structure can lead to increased complexity, making these models harder to implement and tune effectively compared to traditional Transformers.

- Data Dependency: Like most supervised models, Hierarchical Transformers typically need substantial amounts of labeled data to perform well on a downstream task. Where labeled data is scarce, their performance may decrease significantly.

- Training Time: Although they can be more efficient during inference, training Hierarchical Transformers can take longer than training simpler models because of their added complexity and additional layers.

Future Directions in Hierarchical Transformers

The field of hierarchical modeling is rapidly evolving, and several future directions can enhance the performance of Hierarchical Transformers in handling large-scale text processing tasks:

1. Integration with Other Neural Architectures

Combining Hierarchical Transformers with other neural network architectures, such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs), may provide further improvements in processing complex text data.

2. Fine-Tuning Pretrained Models

Utilizing pretrained models that have already learned general linguistic features can save time and resources. Fine-tuning these models on specific datasets can lead to enhanced performance tailored to individual use cases.

3. Exploring Multimodal Approaches

Hierarchical Transformers could also benefit from multimodal data, combining text with images or audio to create richer representations and improve understanding in tasks such as video content analysis.

4. Enhanced Training Techniques

Research into advanced training techniques, such as self-supervised learning or semi-supervised learning, may help overcome the challenge of limited labeled data while improving model robustness and accuracy.

The Importance of Hierarchical Transformers in Big Data

The role of Hierarchical Transformers in large-scale text processing is increasingly crucial in today’s data-driven world. By enhancing the efficiency and effectiveness of text processing, they become indispensable tools for organizations seeking to leverage the vast amounts of information generated daily.

The ability to analyze, classify, and derive insights from massive text data sets is essential for businesses across various industries. As these models continue to evolve, their contributions to the field of Big Data will only grow, further enriching the capabilities of data practitioners and researchers.

In short, Hierarchical Transformers have advanced large-scale text processing in Big Data by offering improved efficiency and performance on massive volumes of text. By capturing hierarchical dependencies and contextual information, they show great potential for tackling complex text processing tasks and for enabling more sophisticated applications in Big Data analytics.